Контрольні точки

Complete environment configuration tasks

/ 30

Download lab assets

/ 20

Configure and execute the Spark code

/ 30

Confirm that the data is loaded into BigQuery

/ 20

Use Dataproc Serverless for Spark to Load BigQuery

Overview

Dataproc Serverless is a fully-managed service that makes it easier to run open source data processing and analytics workloads without the need to manage infrastructure or manually tune workloads.

Dataproc Serverless for Spark provides an optimized environment designed to easily move existing Spark workloads to Google Cloud.

In this lab you will run a Batch workload on Dataproc Serverless environment. The workload will use a Spark template to process an Avro file to create and load a BigQuery table.

What you'll do

- Configure the environment

- Download lab assets

- Configure and execute the Spark code

- View data in BigQuery

Setup

Before you click the Start Lab button

This Qwiklabs hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

What you need

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab.

How to start your lab and sign in to the Console

-

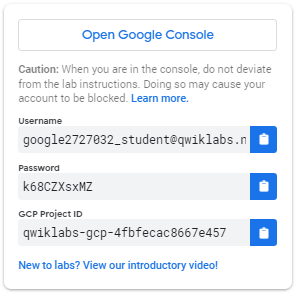

Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is a panel populated with the temporary credentials that you must use for this lab.

-

Copy the username, and then click Open Google Console. The lab spins up resources, and then opens another tab that shows the Choose an account page.

Note: Open the tabs in separate windows, side-by-side. -

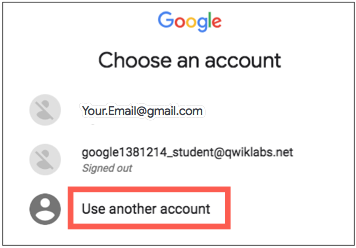

On the Choose an account page, click Use Another Account. The Sign in page opens.

-

Paste the username that you copied from the Connection Details panel. Then copy and paste the password.

- Click through the subsequent pages:

- Accept the terms and conditions.

- Do not add recovery options or two-factor authentication (because this is a temporary account).

- Do not sign up for free trials.

After a few moments, the Cloud console opens in this tab.

Activate Google Cloud Shell

Google Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5GB home directory and runs on the Google Cloud.

Google Cloud Shell provides command-line access to your Google Cloud resources.

-

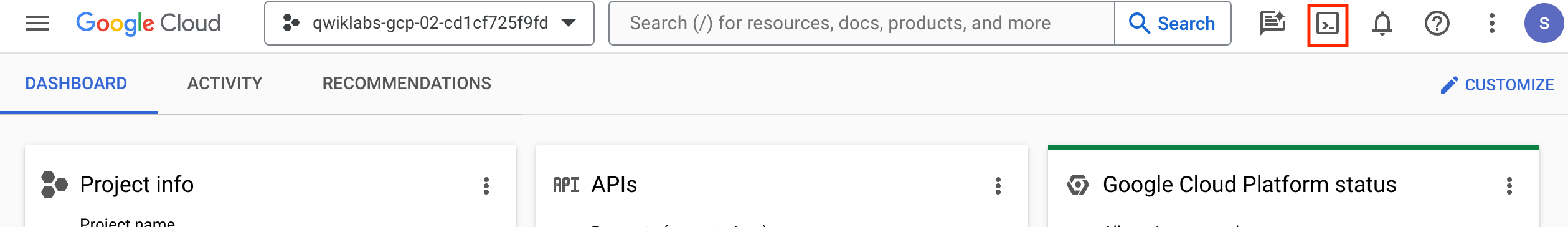

In Cloud console, on the top right toolbar, click the Open Cloud Shell button.

-

Click Continue.

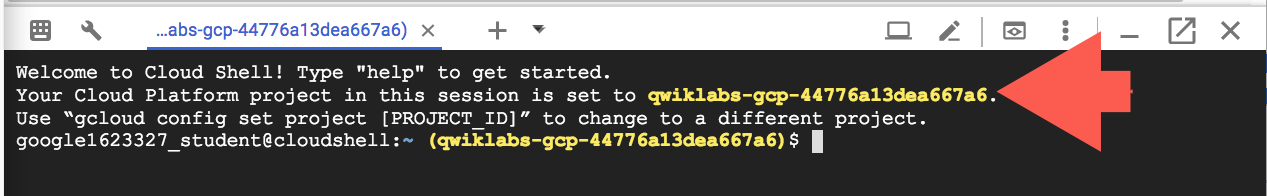

It takes a few moments to provision and connect to the environment. When you are connected, you are already authenticated, and the project is set to your PROJECT_ID. For example:

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

- You can list the active account name with this command:

Output:

Example output:

- You can list the project ID with this command:

Output:

Example output:

Task 1. Complete environment configuration tasks

First, you're going to perform a few enivronment configuration tasks to support the execution of a Dataproc Serverless workload.

- In the Cloud Shell, run the following command to enable Private IP Access:

- Use the following command to create a new Cloud Storage bucket as a staging location:

- Use the following command to create a new Cloud Storage bucket as temporary location for BigQuery while it creates and loads a table:

- Create a BQ dataset to store the data.

Task 2. Download lab assets

Next, you're going to download a few assets necessary to complete the lab into lab provided Compute Engine VM. You will perform the rest of the steps in the lab inside the Compute Engine VM.

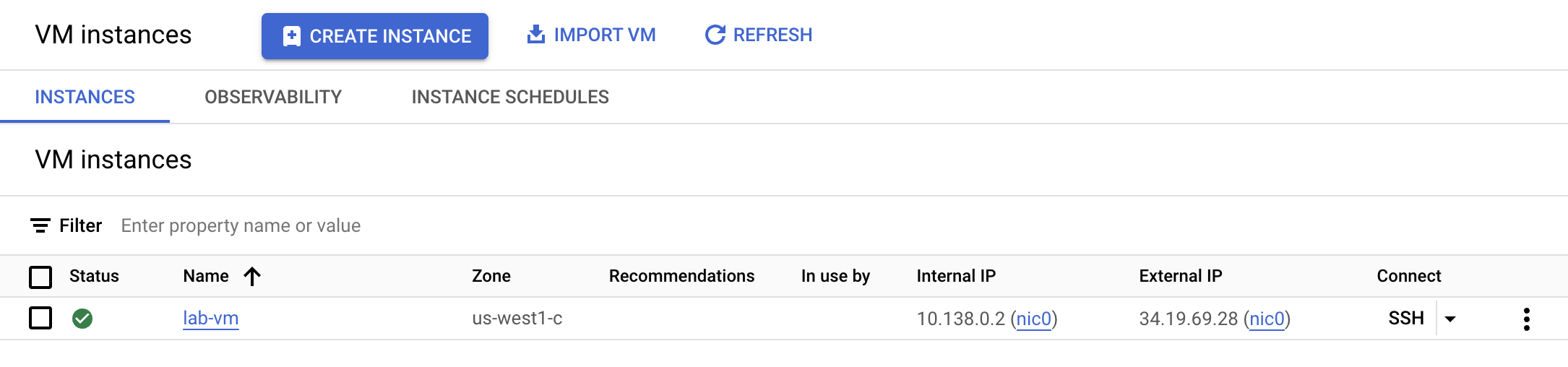

- From the Navigation menu click on Compute Engine. Here you'll see a linux VM provisioned for you. Click the SSH button next to the lab-vm instance.

- At the VM terminal prompt, download the Avro file that will be processed for storage in BigQuery.

- Next, move the Avro file to the staging Cloud Storage bucket you created earlier.

- Download an archive containing the Spark code to be executed against the Serverless environment.

- Extract the archive.

- Change to the Python directory.

Task 3. Configure and execute the Spark code

Next, you're going to set a few environment variables into VM instance terminal and execute a Spark template to load data into BigQuery.

- Set the following environment variables for the Dataproc Serverless environment.

- Run the following code to execute the Spark Cloud Storage to BigQuery template to load the Avro file in to BigQuery.

Task 4. Confirm that the data was loaded into BigQuery

Now that you have successfully executed the Spark template, it is time to examine the results in BigQuery.

- View the data in the new table in BigQuery.

- The query should return results similiar to the following:

Example output:

Congratulations!

You successfully executed a Batch workload using Dataproc Serverless for Spark to load an Avro file into a BigQuery table.

Copyright 2022 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.