Deploy Go Apps on Google Cloud Serverless Platforms

GSP702

Overview

Go is an open source programming language by Google that makes it easy to build fast, reliable, and efficient software at scale. In this lab you explore the basics of Go by deploying a simple Go app to Cloud Run Functions and App Engine. You then use the Go app to access data in BigQuery and Firestore.

What you'll do

In this lab, you perform the following:

- Set up your Firestore Database and import data

- Get an introduction to the power of Cloud Build

- Explore data in BigQuery and Firestore

- Deploy a Go app to App Engine and Cloud Run Functions

- Examine the Go app code

- Test the app on each of the platforms

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab---remember, once you start, you cannot pause a lab.

How to start your lab and sign in to the Google Cloud console

- Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is the Lab Details panel with the following:

  - The Open Google Cloud console button

  - Time remaining

  - The temporary credentials that you must use for this lab

  - Other information, if needed, to step through this lab

- Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

  The lab spins up resources, and then opens another tab that shows the Sign in page.

  Tip: Arrange the tabs in separate windows, side-by-side.

  Note: If you see the Choose an account dialog, click Use Another Account.

- If necessary, copy the Username below and paste it into the Sign in dialog.

  {{{user_0.username | "Username"}}}

  You can also find the Username in the Lab Details panel.

- Click Next.

- Copy the Password below and paste it into the Welcome dialog.

  {{{user_0.password | "Password"}}}

  You can also find the Password in the Lab Details panel.

- Click Next.

  Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

  Note: Using your own Google Cloud account for this lab may incur extra charges.

- Click through the subsequent pages:

  - Accept the terms and conditions.

  - Do not add recovery options or two-factor authentication (because this is a temporary account).

  - Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Activate Cloud Shell

Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5GB home directory and runs on Google Cloud. Cloud Shell provides command-line access to your Google Cloud resources.

- Click Activate Cloud Shell at the top of the Google Cloud console.

When you are connected, you are already authenticated, and the project is set to your Project_ID.

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

- (Optional) You can list the active account name with this command:

- Click Authorize.

Output:

- (Optional) You can list the project ID with this command:

Output:

Note: For full documentation of gcloud in Google Cloud, refer to the gcloud CLI overview guide.

What is Go?

Go (golang) is a general-purpose language designed with systems programming in mind. It is strongly typed and garbage-collected and has first class support for concurrent programming. Programs are constructed from packages, whose properties allow efficient management of dependencies.

Unlike Python and JavaScript, Go is compiled, not interpreted. Go source code is compiled into machine code before execution. As a result, Go is typically faster and more efficient than interpreted languages and requires no installed runtime like Node, Python, or JDK to execute.
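The language features described above can be illustrated with a short program. This is only a minimal sketch, not part of the lab's sample app: the function name sumSquares is made up for this example. It shows static typing, goroutines, and a channel working together.

```go
package main

import (
	"fmt"
	"sync"
)

// sumSquares squares each input in its own goroutine and adds up the results.
// The name and the task are illustrative only.
func sumSquares(nums []int) int {
	// Buffered channel so that no goroutine blocks when sending its result.
	results := make(chan int, len(nums))

	var wg sync.WaitGroup
	for _, n := range nums {
		wg.Add(1)
		go func(n int) {
			defer wg.Done()
			results <- n * n
		}(n)
	}

	// Wait for every goroutine to finish, then close the channel so the
	// range loop below terminates.
	wg.Wait()
	close(results)

	total := 0
	for sq := range results {
		total += sq
	}
	return total
}

func main() {
	fmt.Println(sumSquares([]int{1, 2, 3, 4})) // prints 30
}
```

Building this with go build produces a single executable that runs without a separately installed runtime.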

Serverless platforms

Serverless computing enables developers to focus on writing code without worrying about infrastructure. It offers a variety of benefits over traditional computing, including zero server management, no up-front provisioning, auto-scaling, and paying only for the resources used. These advantages make serverless ideal for use cases like stateless HTTP applications, web, mobile, IoT backends, batch and stream data processing, chatbots, and more.

Go is well suited to cloud applications because of its efficiency, portability, and how easy it is to learn. This lab shows you how to use Cloud Build to deploy a Go app to Cloud Run Functions and App Engine, both Google serverless platforms:

- Cloud Run Functions

- App Engine

Cloud Build

Cloud Build is a service that executes your builds on Google Cloud infrastructure. Cloud Build can import source code from Cloud Storage, Cloud Source Repositories, GitHub, or Bitbucket, execute a build to your specifications, and produce artifacts such as Docker containers.

Cloud Build executes your build as a series of build steps, where each build step is run in a Docker container. A build step can do anything that a container can do irrespective of the environment. For more information, refer to the Cloud Build documentation.

Cloud Run Functions

Cloud Run Functions is Google Cloud's event-driven serverless compute platform. Because Go compiles to a machine code binary, has no dependent frameworks to start, and does not require an interpreter, Go cold start time is between 80 and 1400 ms. This means that applications and services can remain dormant without costing anything, and then start up cold and begin serving traffic in 1400 ms or less. After this initial start, the function serves traffic without further startup delay. This factor alone makes Go an ideal language for a Cloud Run Functions deployment.

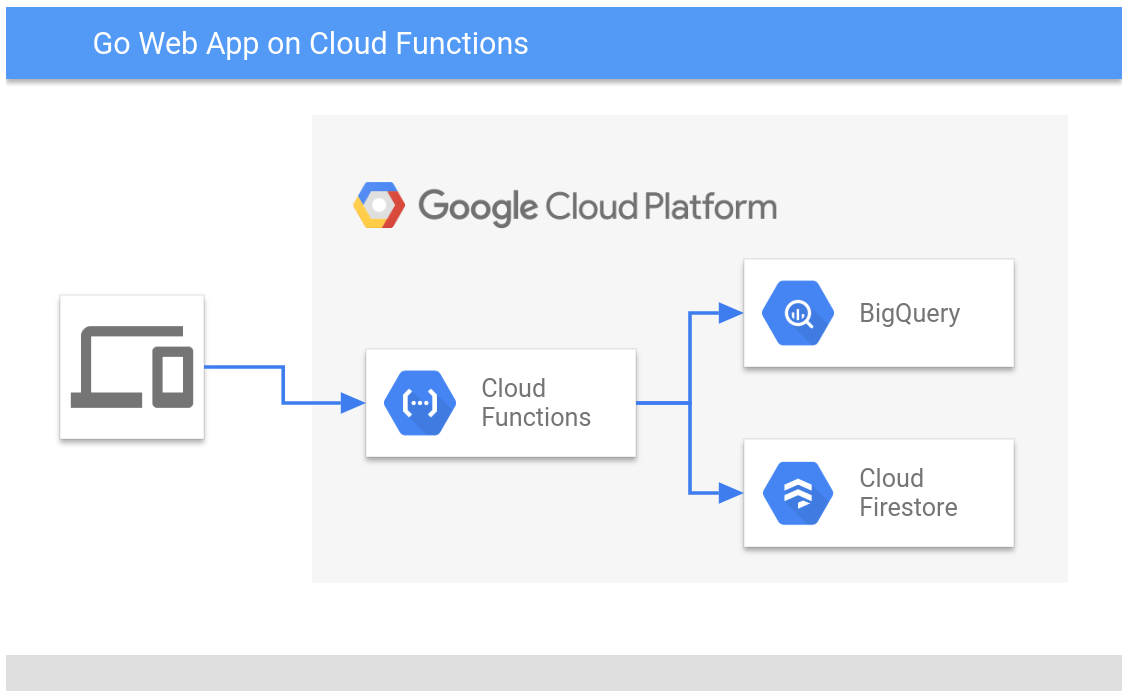

Architecture

When you deploy your sample Go app, Google Cloud Data Drive, to Cloud Run Functions, the data flow architecture looks like this:

App Engine

The App Engine standard environment is based on container instances running on Google's infrastructure. Containers are preconfigured with one of several available runtimes.

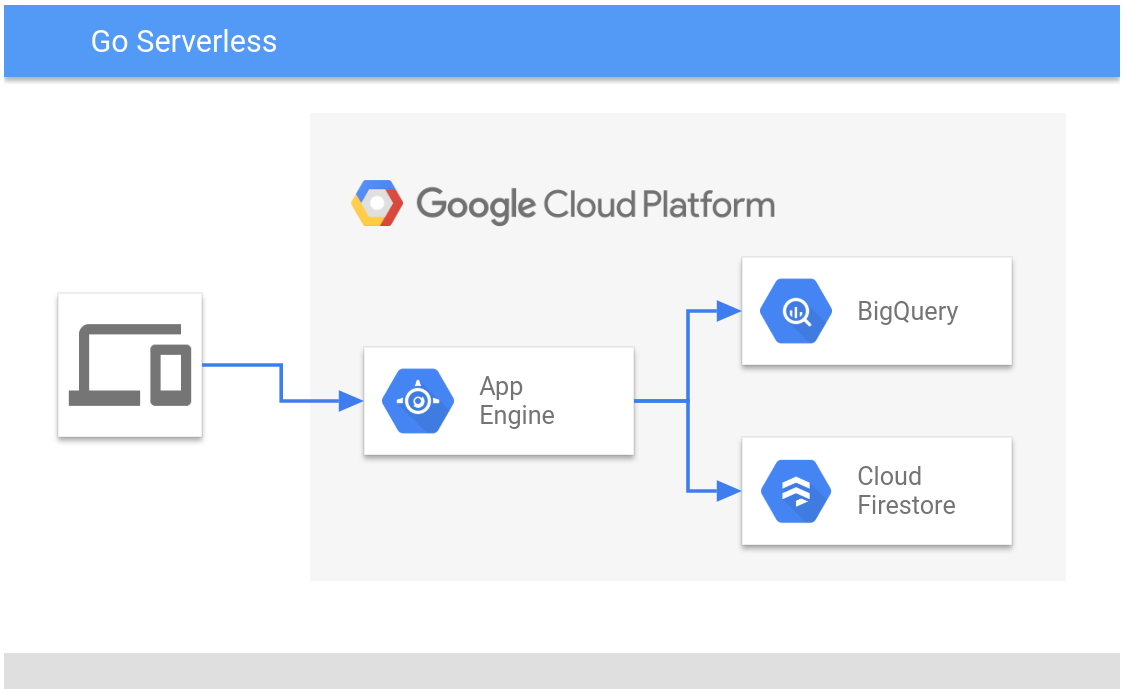

Architecture

When you deploy your sample Go app, Google Cloud Data Drive, to App Engine, the data flow architecture looks like this:

Google Cloud Data Drive source code

In this lab you deploy a simple app called Google Cloud Data Drive. Google developed and open sourced this app to extract data from Google Cloud quickly. The Google Cloud Data Drive app is one of a series of tools that demonstrate effective usage patterns for Cloud APIs and services.

The Google Cloud Data Drive Go app exposes a simple composable URL path to retrieve data in JSON format from supported Google Cloud data platforms. The application currently supports BigQuery and Firestore, but has a modular design that can support any number of data sources.

These HTTP URL patterns are used to get data from Google Cloud running Google Cloud Data Drive.

- Firestore: [SERVICE_URL]/fs/[PROJECT_ID]/[COLLECTION]/[DOCUMENT]

- BigQuery: [SERVICE_URL]/bq/[PROJECT_ID]/[DATASET]/[TABLE]

Where:

| Parameter | Description |
| --- | --- |
| [SERVICE_URL] | The base URL for the application from App Engine or Cloud Run Functions. |
| [PROJECT_ID] | The Project ID of the Firestore Collection or BigQuery Dataset you want to access. You find the Project ID in the left panel of your lab. |
| [COLLECTION] | The Firestore Collection ID. |
| [DOCUMENT] | The Firestore document you would like to return. |
| [DATASET] | The BigQuery Dataset name. |
| [TABLE] | The BigQuery Table name. |
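To make the URL patterns above concrete, here is a minimal sketch of how such a composable path could be split into its parameters. It is not the app's actual implementation: the dataRequest type, the parseDataPath function, and the sample project and document names are all hypothetical.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// dataRequest holds the pieces of a Google Cloud Data Drive style URL path.
// The type and its field names are hypothetical, chosen for this sketch.
type dataRequest struct {
	Source    string // "fs" (Firestore) or "bq" (BigQuery)
	ProjectID string
	Container string // Firestore collection or BigQuery dataset
	Item      string // Firestore document or BigQuery table
}

// parseDataPath splits a path such as /bq/my-project/publicviews/ca_zip_codes
// into its four components and rejects anything that does not fit the pattern.
func parseDataPath(path string) (dataRequest, error) {
	parts := strings.Split(strings.Trim(path, "/"), "/")
	if len(parts) != 4 {
		return dataRequest{}, errors.New("expected four path segments")
	}
	for _, p := range parts {
		if p == "" {
			return dataRequest{}, errors.New("empty path segment")
		}
	}
	if parts[0] != "fs" && parts[0] != "bq" {
		return dataRequest{}, fmt.Errorf("unsupported data source %q", parts[0])
	}
	return dataRequest{
		Source:    parts[0],
		ProjectID: parts[1],
		Container: parts[2],
		Item:      parts[3],
	}, nil
}

func main() {
	// "my-project" is a placeholder project ID.
	req, err := parseDataPath("/bq/my-project/publicviews/ca_zip_codes")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%+v\n", req)
}
```

Keeping the data source as the first path segment is what would let further sources be added later without changing the rest of the pattern.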

Note: Although this lab uses curl to test the app, you can create the URLs yourself for an added functionality test in a browser.

Task 1. Set up your environment

- In Cloud Shell, enter the following command to create an environment variable to store the Project ID to use later in this lab:

- Get the sample code for this lab by copying from Google Cloud Storage (GCS):

Prepare your databases

This lab uses sample data in BigQuery and Firestore to test your Go app.

BigQuery database

BigQuery is a serverless, future-proof data warehouse with numerous features for machine learning, data partitioning, and segmentation. It lets you analyze gigabytes to petabytes of data using ANSI SQL at high speed and with zero operational overhead.

The BigQuery dataset is a view of California zip codes and was created for you when the lab started.

Firestore database

Firestore is a serverless document database with fast document lookup and real-time eventing features. It is also capable of a 99.999% SLA. To use data in Firestore to test your app, you must initialize Firestore in native mode and then import the sample data.

A Firestore native mode database instance has been created.

- In the Cloud Console, click Navigation menu > Firestore to open Firestore in the Console.

Wait for the Firestore Database instance to initialize. This process also initializes App Engine in the same region, which allows you to deploy the application to App Engine without first creating an App Engine instance.

- In Cloud Shell, launch a Firestore import job that provides sample Firestore data for the lab:

This import job loads a Cloud Firestore backup of a collection called symbols from the $PROJECT_ID-firestore storage bucket into your Firestore database.

This import job takes up to 5 minutes to complete. Start the next section while you wait.

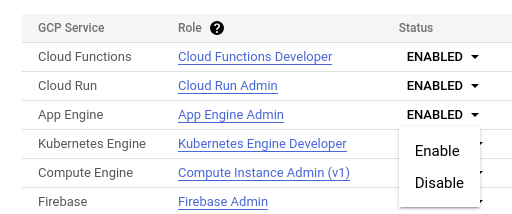

Configure permissions for Cloud Build

Cloud Build is a service that executes your builds on Google Cloud infrastructure. By default, Cloud Build does not have sufficient permissions to deploy applications to:

- App Engine

- Cloud Run Functions

You must enable these services before you can use Cloud Build to deploy the Google Cloud Data Drive app.

- In the Console, click Navigation menu > Cloud Build > Settings.

- Set the Status for Cloud Functions to Enable.

- When prompted, click Grant access to All Service Accounts.

- Set the Status for Cloud Run to Enable.

- Set the Status for App Engine to Enable.

Task 2. Deploy to Cloud Run Functions

Cloud Run Functions is Google Cloud's event-driven serverless compute platform. When you combine Go and Cloud Run Functions, you get fast spin-up times and automatic scaling, so your application can respond to events quickly.

Have a look at the source code and see how to reuse the Google Cloud Data Drive source code in a Cloud Run Function.

Review the main function

- At the start of the main function in DIY-Tools/gcp-data-drive/cmd/webserver/main.go, you tell the web server to send all HTTP requests to the gcpdatadrive.GetJSONData Go function.

- View the main function in main.go on GitHub.
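The pattern described above, a main function that routes every HTTP request to one handler, can be sketched as follows. This is not the repository's main.go: getJSONData is a hypothetical stand-in for gcpdatadrive.GetJSONData, and reading the port from the PORT environment variable with a fallback of 8080 is an assumption based on common App Engine convention.

```go
package main

import (
	"encoding/json"
	"log"
	"net/http"
	"os"
)

// getJSONData is a hypothetical stand-in for gcpdatadrive.GetJSONData.
// It only echoes the requested path as JSON.
func getJSONData(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "application/json")
	if err := json.NewEncoder(w).Encode(map[string]string{"path": r.URL.Path}); err != nil {
		log.Printf("encoding response: %v", err)
	}
}

// listenAddr returns the address to listen on, falling back to port 8080
// when no port is supplied (an assumption made for this sketch).
func listenAddr(port string) string {
	if port == "" {
		port = "8080"
	}
	return ":" + port
}

func main() {
	// Register the handler for "/", which matches every request path.
	http.HandleFunc("/", getJSONData)

	addr := listenAddr(os.Getenv("PORT"))
	log.Printf("listening on %s", addr)
	log.Fatal(http.ListenAndServe(addr, nil))
}
```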

In a Cloud Run Function, main.go is not used. Instead, the Cloud Run Functions runtime is configured to send HTTP requests directly to the gcpdatadrive.GetJSONData Go function that is defined in the DIY-Tools/gcp-data-drive/gcpdatadrive.go file.

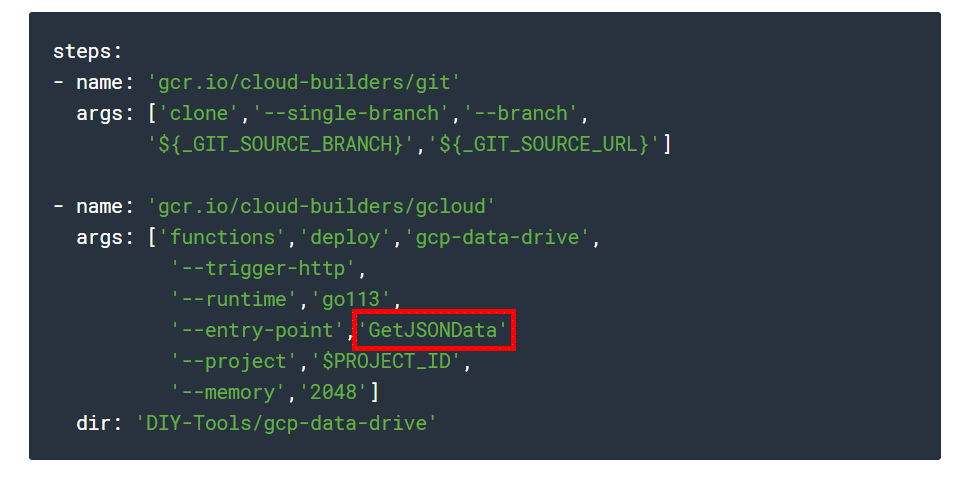

- You can see how this is done by looking at how the Google Cloud Data Drive application is deployed to Cloud Run Functions using Cloud Build with cloudbuild_gcf.yaml:

- View cloudbuild_gcf.yaml on GitHub.

These Cloud Build steps are also similar to those used to deploy the application to Cloud Run, but in this case, you deploy the application to Cloud Run Functions using the gcloud functions deploy command.

Notice that the Cloud Run Functions --entry-point parameter is used to specify the GetJSONData function and not the main function in the main Go package that is used when it is deployed to App Engine or Cloud Run.
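The difference between the two entry points can be sketched in a few lines. In this hedged example, GetData is a hypothetical exported HTTP function and invoke is a made-up helper that imitates what a functions runtime does: it hands the request straight to the named function, with no main-registered web server in between. The real runtime is more involved than this.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// GetData is a hypothetical exported HTTP function. A function used as an
// entry point needs the standard handler signature shown here.
func GetData(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "application/json")
	// Encode writes the JSON object followed by a newline.
	json.NewEncoder(w).Encode(map[string]string{"requested": r.URL.Path})
}

// invoke imitates a runtime calling the entry point directly and returns
// the status code and body that the function produced.
func invoke(entryPoint http.HandlerFunc, path string) (int, string) {
	req := httptest.NewRequest(http.MethodGet, path, nil)
	rec := httptest.NewRecorder()
	entryPoint(rec, req)
	return rec.Code, rec.Body.String()
}

func main() {
	// The path below uses placeholder project and document names.
	status, body := invoke(GetData, "/fs/my-project/symbols/my-document")
	fmt.Println(status, body)
}
```

Because the handler has no dependency on main, the same function can be registered with a web server for App Engine or named as the entry point for a function deployment.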

Deploy the Google Cloud Data Drive app

- In Cloud Shell, change to the directory for the application source code that you copied earlier:

- Enter the command below to fetch the project details and grant the pubsub.publisher permission to the service account:

- Deploy the Google Cloud Data Drive app to Cloud Run Functions with Cloud Build:

- Enter the following command to allow unauthenticated access to the Google Cloud Data Drive Cloud Run Function:

If asked, Would you like to run this command and additionally grant [allUsers] permission to invoke function [gcp-data-drive] (Y/n)?, enter Y.

- Store the Cloud Run Functions HTTP Trigger URL in an environment variable:

- Use curl to call the application to query data from the Firestore symbols collection in your project:

This responds with the contents of a JSON file containing the values from the symbols collection in your project.

- Use curl to call the application to query data from the BigQuery publicviews.ca_zip_codes table in your lab project:

This responds with the contents of a JSON file containing the results of the BigQuery SQL statement SELECT * FROM publicviews.ca_zip_codes;.

Task 3. Additional Cloud Run Functions triggers

Cloud Run Functions has an event driven architecture. The app you deployed uses an HTTP request as an event. Explore the code of another Go app that takes in a different event type. The function is triggered on a Firestore write event.

The Go source code below is adapted from the Go Code sample guide:

View the example source code on GitHub.

This example contains code that can be used to deploy Cloud Run Functions that handle Firestore events, instead of the HTTP request trigger used in the lab sample application. You register this function with a Cloud Firestore event trigger using DoSomeThingOnWrite as the Cloud Run Functions entrypoint.

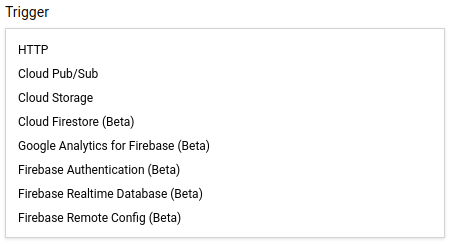

Cloud Run Functions currently support the following event triggers.

The example above is a simple case, but you can imagine the potential. Simple Go Cloud Run Functions do tasks that used to come with the burden of managing an operating system. For example, you can use a function like this to run Data Loss Prevention (DLP) to sanitize data when a customer writes something to Cloud Firestore via a mobile app.

The Cloud Run Function could also rewrite a summary report to Firestore for web consumption based on a Pub/Sub event. Any number of small, event-based processes are good candidates for Go Cloud Run Functions. Best of all, there are zero servers to patch.

Task 4. Deploy to App Engine

App Engine is well suited for running Go applications. App Engine is a fully managed serverless compute platform that scales up and down as workloads fluctuate. Go applications are compiled to a single binary executable file during deployment. Go cold startup times for applications are often between 80 and 1400 milliseconds, and when running, App Engine can scale horizontally in seconds to meet demanding global workloads.

Review the Cloud Build YAML config file

The Cloud Build YAML file, DIY-Tools/gcp-data-drive/cloudbuild_appengine.yaml, shown below contains the Cloud Build step definitions that deploy your application to App Engine. You use this file to deploy the application to App Engine.

The first step executes the git command to clone the source repository that contains the application. This step is parameterized to allow you to easily switch between application branches.

The second step executes the sed command to replace the runtime: go113 line in the app.yaml file with runtime: go121. This is necessary because the Go 1.13 runtime is deprecated and will be removed in the future. Note that this is just a patch to keep the app running. You should update the app to use the latest Go runtime in your own projects.

The third step executes the gcloud app deploy command to deploy the application to App Engine.

As with the other examples, you could manually deploy this app using gcloud app deploy, but Cloud Build allows you to offload this to Google infrastructure, for example if you want to create a serverless CI/CD pipeline.

View cloudbuild_appengine.yaml on GitHub.

Deploy the Google Cloud Data Drive app

- Still in DIY-Tools/gcp-data-drive, deploy the Go webserver app to App Engine using Cloud Build:

Deployment takes a few minutes to complete.

- Store the App Engine URL in an environment variable to use in the command to call the app:

Note: The App Engine URL appears as the target url in the deployment output.

- Use curl to call the application running on App Engine to query data from Firestore:

This responds with the contents of a JSON file containing three values from the symbols collection in your project.

- Use curl to call the app running on App Engine to query data from BigQuery:

This responds with the contents of a JSON file containing the results of the BigQuery SQL statement SELECT * FROM publicviews.ca_zip_codes;.

Increase the load

Increase the load to see what happens.

- Use the nano editor to create a simple shell script to put some load on the application:

- Type or paste the following script into the editor:

- Press Ctrl+X, Y, and then Enter to save the new file.

- Make the script executable:

- Run the load test:

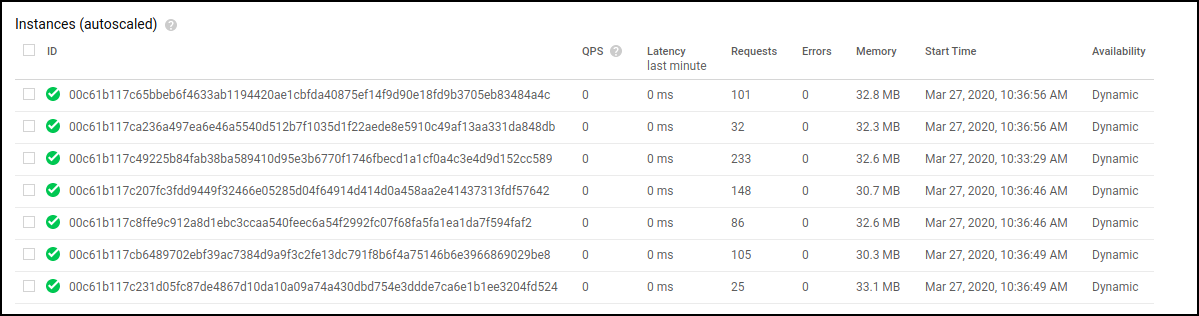

- In the Console, click Navigation menu > App Engine > Instances.

The Instances window opens and shows a summary of requests/second and a list of instances spawned when you ran the load test in Cloud Shell. Notice how App Engine automatically created additional app instances and distributed the incoming HTTP traffic.

- In Cloud Shell, press Ctrl+C to end the load test if it is still running.

Congratulations!

In this self-paced lab, you explored how to use the Go programming language to deploy to Google Cloud serverless compute platforms. The following are key takeaways:

- The Go programming language has portability and efficiency features that make it an excellent fit for modern cloud and on-premises applications.

- The same Go code base can be deployed to all Google Cloud serverless, container-based, and Anthos compute platforms without modification.

- Cloud Build (also serverless) is a key part of a cloud-based CI/CD pipeline and provides consistency across your application delivery lifecycle.

- Cloud Run Functions and App Engine are simple serverless platforms that operate at Google scale and are easy to deploy to.

- Google Cloud Data Drive is an open source data extraction library that you can use in your existing Google Cloud environment.

Next steps / Learn more

- To learn more about Go, see The Go Programming Language.

- Check out Go on GitHub.

Google Cloud training and certification

...helps you make the most of Google Cloud technologies. Our classes include technical skills and best practices to help you get up to speed quickly and continue your learning journey. We offer fundamental to advanced level training, with on-demand, live, and virtual options to suit your busy schedule. Certifications help you validate and prove your skill and expertise in Google Cloud technologies.

Manual Last Updated November 05, 2024

Lab Last Tested November 05, 2024

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.