
Running a Dedicated Ethereum RPC Node in Google Cloud

GSP1116

Overview

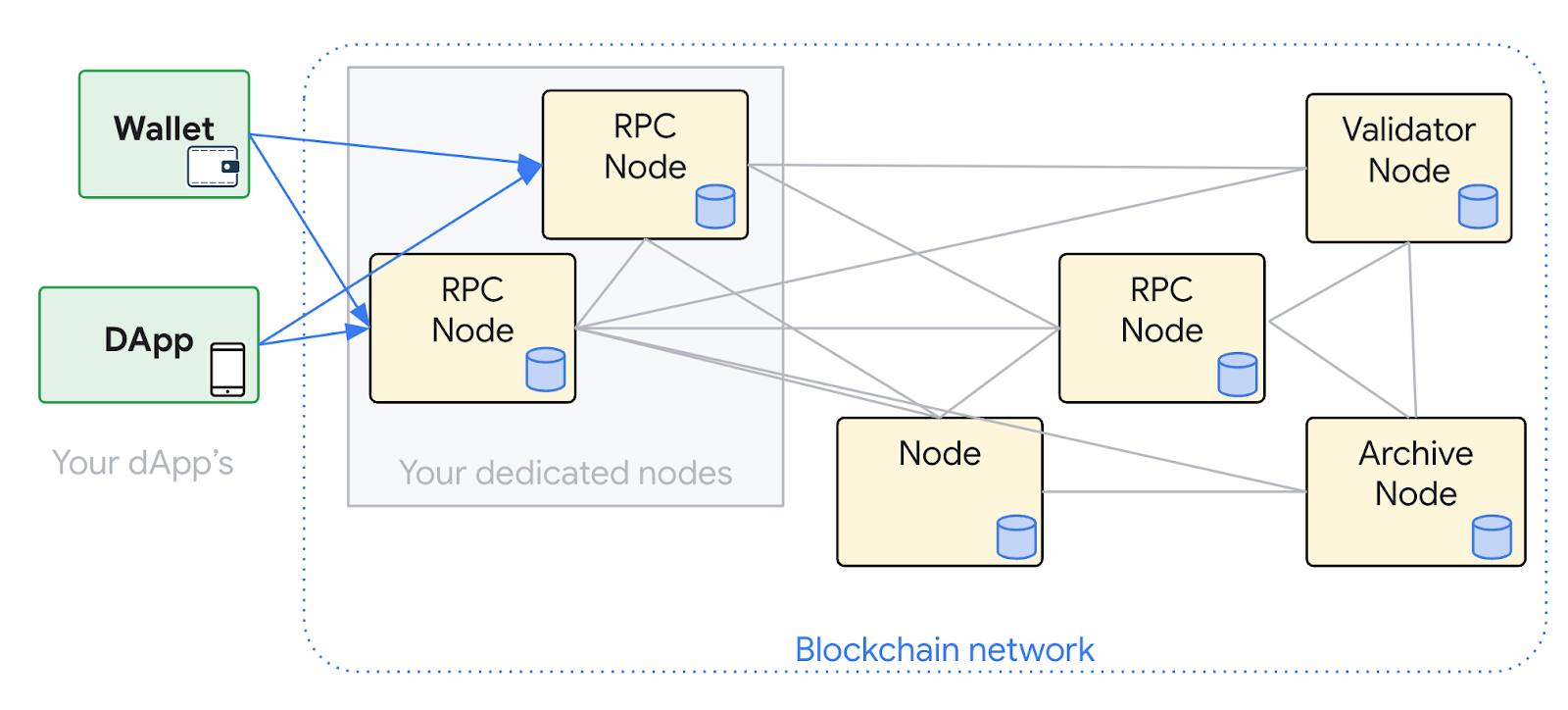

Hosting your own blockchain nodes may be required for security, compliance, performance, or privacy reasons. In addition, a decentralized, resilient, and sustainable network is a critical foundation for any blockchain protocol. Web3 developers can use Google Cloud's Blockchain Node Engine, a fully managed node-hosting solution for Web3 development. Organizations can also configure and manage their own nodes in Google Cloud. As the trusted partner for Web3 infrastructure, Google Cloud offers secure, reliable, and scalable node-hosting infrastructure. To learn more about hosting nodes on Google Cloud, see the blog post Introducing Blockchain Node Engine: fully managed node-hosting for Web3 development.

To learn more about the technical considerations and architectural decisions involved in deploying self-managed blockchain nodes to the cloud, see the blog post Google Cloud for Web3.

In this lab, you create a virtual machine (VM) to deploy an Ethereum RPC node. An Ethereum RPC node receives blockchain updates from the network and processes RPC API requests. You use an e2-standard-4 machine type (4 vCPUs and 16 GB of RAM) with a 50-GB boot disk. To ensure there is enough room for the blockchain data, you attach a 200-GB SSD disk to the instance. You use Ubuntu 20.04 and deploy two services: Geth, the "execution layer", and Lighthouse, the "consensus layer". These two services work together to form an Ethereum RPC node.

Objectives

In this lab, you learn how to perform the following tasks:

- Create a Compute Engine instance with a persistent disk.

- Configure a static IP address and network firewall rules.

- Schedule regular backups.

- Deploy Geth, the execution layer for Ethereum.

- Deploy Lighthouse, the consensus layer for Ethereum.

- Make Ethereum RPC calls.

- Configure Cloud Logging.

- Configure Cloud Monitoring.

- Configure uptime checks.

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab. Remember, once you start, you cannot pause a lab.

How to start your lab and sign in to the Google Cloud console

- Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is the Lab Details panel with the following:

- The Open Google Cloud console button

- Time remaining

- The temporary credentials that you must use for this lab

- Other information, if needed, to step through this lab

- Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

The lab spins up resources, and then opens another tab that shows the Sign in page.

Tip: Arrange the tabs in separate windows, side-by-side.

Note: If you see the Choose an account dialog, click Use Another Account.

- If necessary, copy the Username below and paste it into the Sign in dialog.

{{{user_0.username | "Username"}}}

You can also find the Username in the Lab Details panel.

- Click Next.

- Copy the Password below and paste it into the Welcome dialog.

{{{user_0.password | "Password"}}}

You can also find the Password in the Lab Details panel.

- Click Next.

Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

Note: Using your own Google Cloud account for this lab may incur extra charges.

- Click through the subsequent pages:

- Accept the terms and conditions.

- Do not add recovery options or two-factor authentication (because this is a temporary account).

- Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

In this lab, you use the following tools:

- Ubuntu 20.04

- Geth

- Lighthouse

- curl

- gcloud

Task 1. Create infrastructure for the Virtual Machine

Create a public static IP address, firewall rule, service account, snapshot schedule and a virtual machine with the new IP address. This is the infrastructure that Ethereum is deployed to.

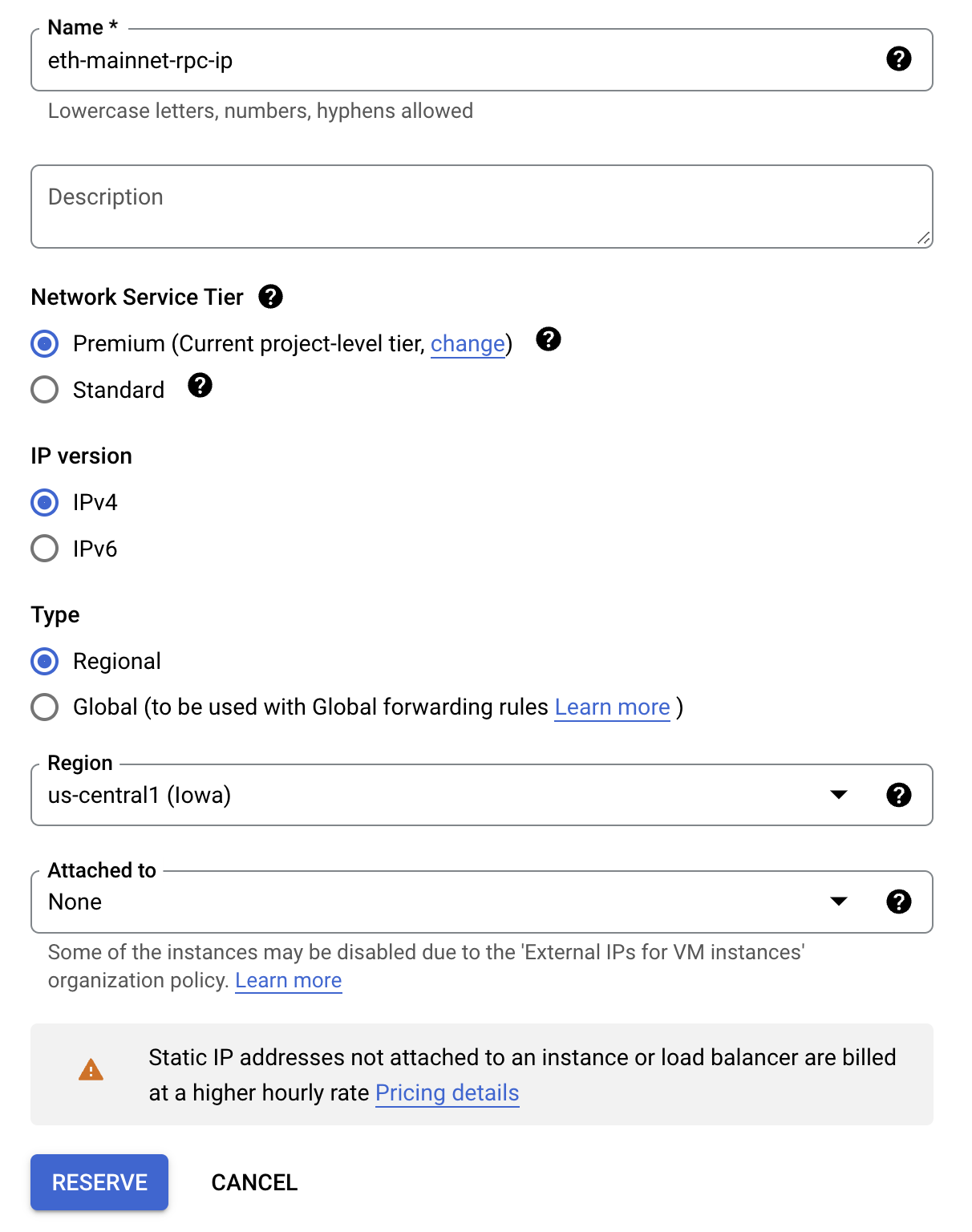

Create a public static IP address

In this section, you set up the public IP address used for the virtual machine.

- From the Navigation menu, under the VPC Network section, click IP Addresses.

- Click on RESERVE EXTERNAL STATIC IP ADDRESS in the action bar to create the static IP address.

- For the static address configuration, use the following:

| Property | Value (type or select) |
|---|---|
| Name | eth-mainnet-rpc-ip |
| Network Service Tier | Premium |
| IP version | IPv4 |
| Type | Regional |
| Region | |
| Attached to | None |

- Click RESERVE.

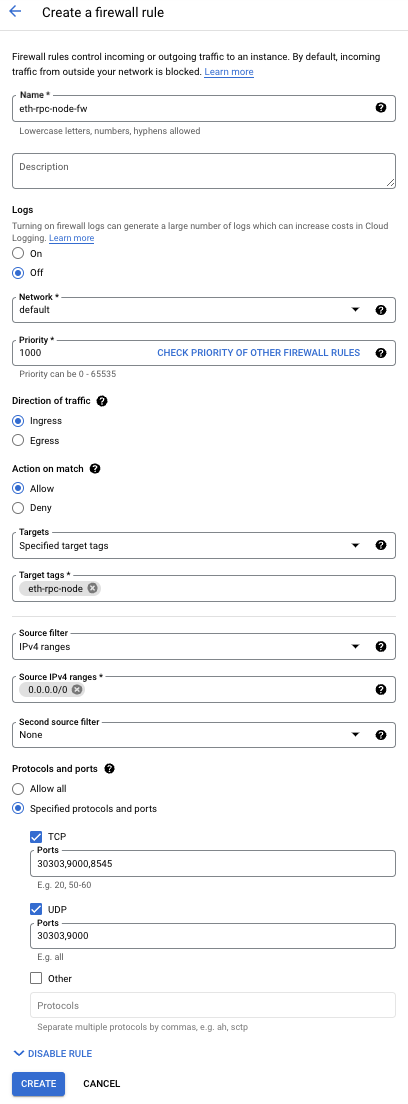

Create a firewall rule

Create Firewall rules so that the VM can communicate on designated ports.

Geth P2P communicates on TCP and UDP on port 30303. Lighthouse P2P communicates on TCP and UDP on port 9000. Geth RPC uses TCP 8545.

- From the Navigation menu, under the VPC Network section, click Firewall.

- Click on CREATE FIREWALL RULE in the action bar to create the firewall rules.

- For the firewall configuration, use the following:

| Property | Value (type or select) |
|---|---|
| Name | eth-rpc-node-fw |
| Logs | Off |
| Network | default |
| Priority | 1000 |
| Direction | Ingress |
| Action on match | Allow |
| Targets | Specified target tags |
| Target Tags | eth-rpc-node (hit enter after typing in value) |
| Source Filter | IPv4 ranges |
| Source IPv4 ranges | 0.0.0.0/0 (hit enter after typing in value) |
| Specified protocols and ports | TCP: 30303, 9000, 8545; UDP: 30303, 9000 |

- Click CREATE.

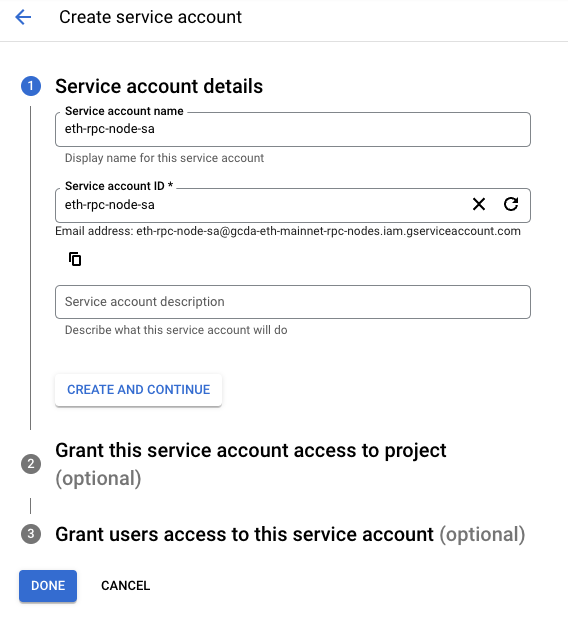

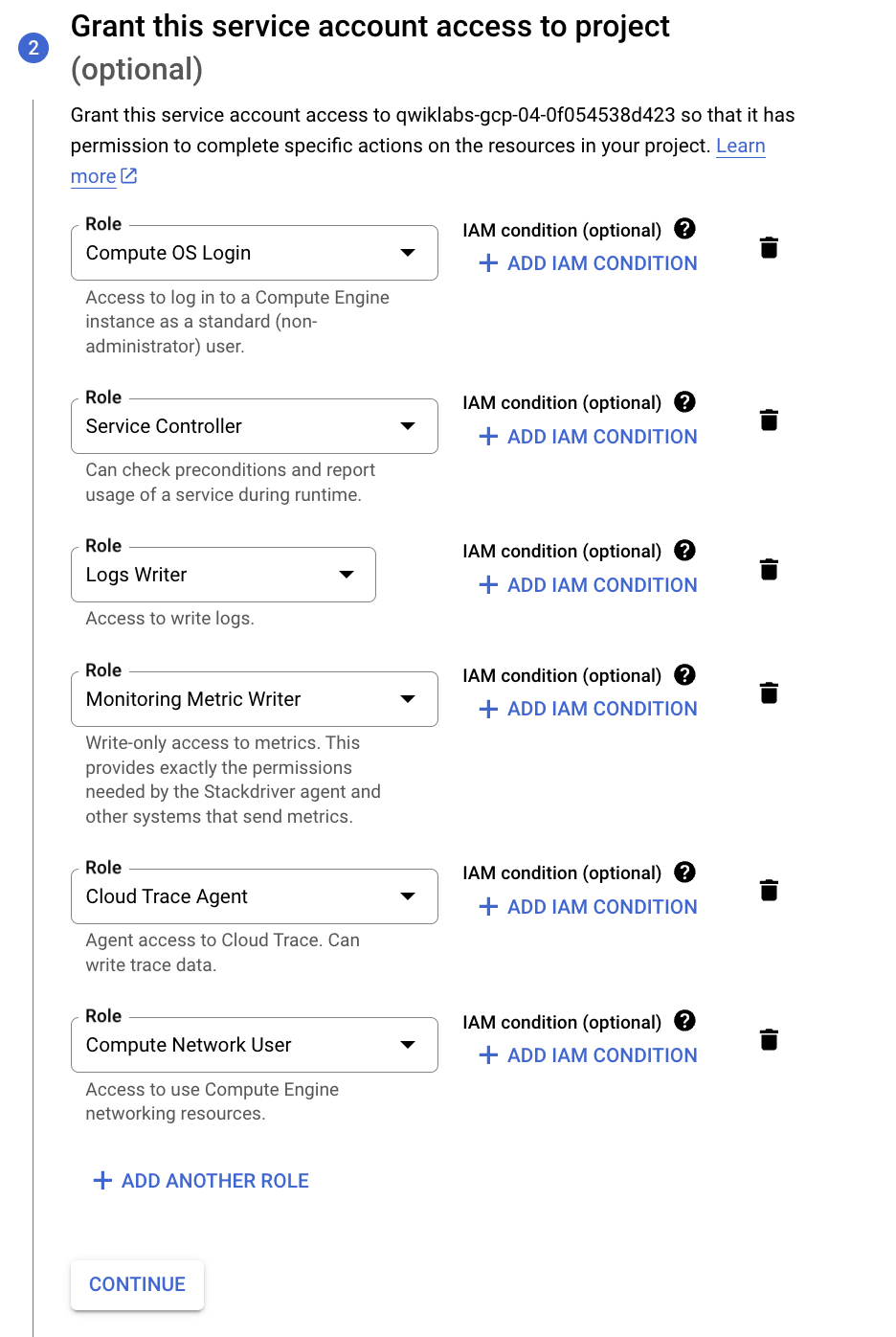
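The rule above admits ingress traffic only when both the protocol and the port match its allow-list. The following Python sketch models that matching logic locally; `ALLOWED`, `is_allowed`, and `rules_flag` are hypothetical names for illustration, not part of any Google Cloud API. `rules_flag` renders the same allow-list as comma-separated `protocol:port` entries, the form accepted by the `--rules` flag of `gcloud compute firewall-rules create`.

```python
# Hypothetical local model of the eth-rpc-node-fw ingress rule.
# It mirrors only the protocol/port allow-list from the table above;
# it does not call any Google Cloud API.
ALLOWED: dict[str, set[int]] = {
    "tcp": {30303, 9000, 8545},  # Geth P2P, Lighthouse P2P, Geth HTTP RPC
    "udp": {30303, 9000},        # Geth and Lighthouse peer discovery
}


def is_allowed(protocol: str, port: int) -> bool:
    """Return True if ingress on this protocol/port matches the allow-list."""
    return port in ALLOWED.get(protocol.lower(), set())


def rules_flag() -> str:
    """Render the allow-list as comma-separated protocol:port entries."""
    return ",".join(
        f"{proto}:{port}"
        for proto in sorted(ALLOWED)
        for port in sorted(ALLOWED[proto])
    )


if __name__ == "__main__":
    print(is_allowed("tcp", 8545))  # RPC over TCP is in the rule
    print(is_allowed("udp", 8545))  # RPC over UDP is not
    print(rules_flag())
```

Because the source range is 0.0.0.0/0, these checks apply to traffic from any IPv4 address, including traffic to the public RPC port 8545.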

Create a service account

Create a service account for the VM to operate under.

- From the Navigation Menu, under the IAM & Admin section, click Service Accounts.

- Click on CREATE SERVICE ACCOUNT in the action bar to create the service account.

- For the service account configuration, use the following:

| Property | Value (type or select) |
|---|---|
| Service account name | eth-rpc-node-sa |
| Service account ID | eth-rpc-node-sa |

- Click CREATE AND CONTINUE.

- Add the following roles:

| Property | Value (type or select) |
|---|---|
| Roles | Compute OS Login, Service Controller, Logs Writer, Monitoring Metric Writer, Cloud Trace Agent, Compute Network User |

- Click CONTINUE.

- Click DONE.

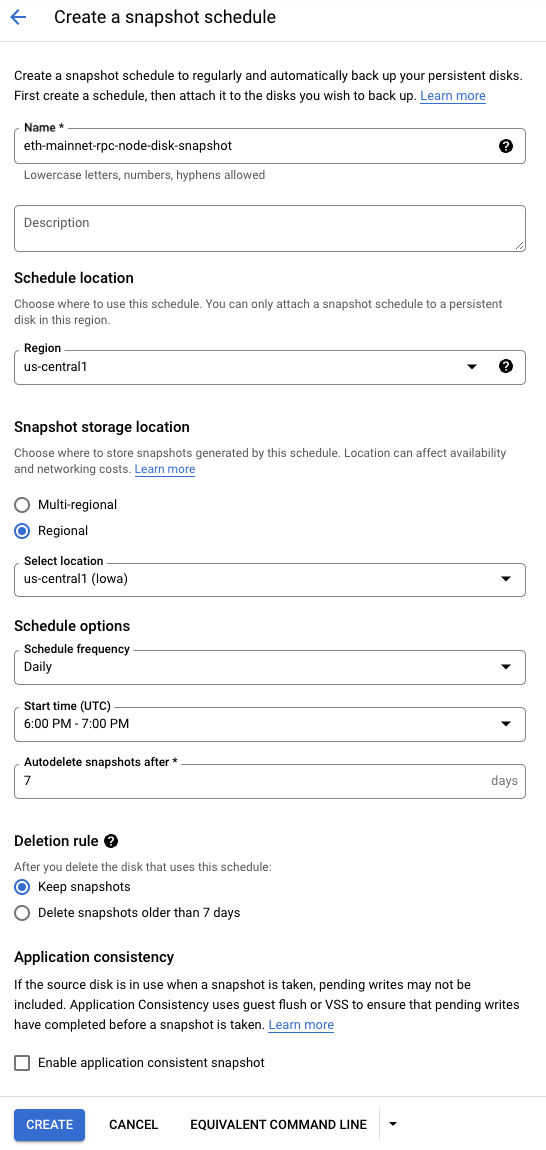

Create a snapshot schedule

In this section, you set up the snapshot schedule for the virtual machine's attached disk, which contains the blockchain data. The schedule backs up the chain data.

- From the Navigation Menu, under the Compute Engine section, click Snapshots.

- Click CREATE SNAPSHOT SCHEDULE to create the snapshot schedule.

- For the snapshot schedule, use the following:

| Property | Value (type or select) |
|---|---|
| Name | eth-mainnet-rpc-node-disk-snapshot |
| Region | |
| Regional | |
| Schedule Options | Schedule frequency: Daily, Start time (UTC): 6:00 PM - 7:00 PM, Autodelete snapshots after: 7 days |
| Deletion rule | Keep snapshots |

- Click CREATE.
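To make the retention setting concrete, the following Python sketch models a daily schedule whose snapshots are auto-deleted after 7 days. It rests on a simplifying assumption about the retention boundary (a snapshot is kept while it is less than 7 days old); Compute Engine's exact deletion timing may differ. `daily_snapshots` and `retained` are hypothetical helpers.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=7)  # "Autodelete snapshots after: 7" days


def daily_snapshots(first: datetime, count: int) -> list[datetime]:
    """Timestamps of `count` snapshots taken once a day, starting at `first`."""
    return [first + timedelta(days=i) for i in range(count)]


def retained(snapshots: list[datetime], now: datetime) -> list[datetime]:
    """Snapshots that already exist at `now` and are younger than RETENTION."""
    return [s for s in snapshots if timedelta(0) <= now - s < RETENTION]


if __name__ == "__main__":
    # First snapshot falls inside the 6:00 PM - 7:00 PM UTC window.
    taken = daily_snapshots(datetime(2024, 1, 1, 18, 0, tzinfo=timezone.utc), 10)
    kept = retained(taken, taken[-1] + timedelta(hours=1))
    print(len(kept), kept[0].date(), kept[-1].date())
```

Under this model, about a week of daily restore points is available at any time, which is the window to keep in mind when planning recovery of the chain data.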

Create a Virtual Machine

In this section, you set up the virtual machine used for the Ethereum deployment.

- From the Navigation Menu, under the Compute Engine section, click VM Instances.

- Click Create Instance to create the VM.

- In Machine configuration, enter the values for the following fields:

| Property | Value (type or select) |
|---|---|
| Name | eth-mainnet-rpc-node |
| Region | |
| Zone | |
| Series | E2 |
| Machine type | e2-standard-4 |

- Click OS and Storage.

- Click Change and select the following values:

| Property | Value (type or select) |
|---|---|
| Operating System | Ubuntu |
| Version | Ubuntu 20.04 LTS (x86/64) |
| Boot disk type | SSD |
| Size | 50GB |

- Click SAVE.

- In Additional storage and VM backups, click Add New Disk and enter the following values:

| Property | Value (type or select) |
|---|---|
| Name | eth-mainnet-rpc-node-disk |
| Disk source type | Blank disk |
| Disk Type | SSD |
| Size | 200GB (alternatively 2,000GB for larger installations) |
| Snapshot schedule | eth-mainnet-rpc-node-disk-snapshot |

- Click Save.

- Click Networking and enter the following value:

| Property | Value (type or select) |
|---|---|
| Network tags | eth-rpc-node (hit enter after typing in value; matches the firewall setting) |

- In Network Interfaces, click default:

- Under Network interface card, select gVNIC.

- For External IPv4 address, select eth-mainnet-rpc-ip (the static IP address created earlier).

- Click DONE.

- Click Security.

- Under Identity and API Access, select the service account eth-rpc-node-sa.

- Click CREATE.

Click Check my progress to verify the objective.

Task 2. Setup and Installation on the Virtual Machine

Now, SSH into the VM and run the commands to install the software.

SSH into the VM

- From the navigation menu, under the Compute Engine section, click VM Instances.

- On the same row as eth-mainnet-rpc-node, click SSH to open an SSH window.

- If prompted Allow SSH-in-browser to connect to VMs, click Authorize.

Create a Swap File on the VM

To give the processes headroom beyond the VM's physical RAM, you create a swap file: disk space that the kernel can use as overflow memory when RAM runs low.

- To create a 25GB swap file, execute the following command:

Note that the first command will take a little time to execute.

- Update the permissions on the swap file:

- Designate the file to be used as swap space:

- Add the swap file configuration to /etc/fstab so that the swap file is enabled again after a reboot:

- Enable the swap file:

- Confirm the swap has been recognized:

You should see a message with a line similar to this:

Output:
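Besides the command above, Linux lists active swap areas in /proc/swaps, whose columns are Filename, Type, Size, Used, and Priority (sizes in KiB). The following Python sketch parses that file to confirm the swap file is active. It assumes the swap path contains no spaces, and `/swapfile` in the usage note is an assumed path, because the lab's exact file name is not shown here.

```python
from pathlib import Path


def parse_proc_swaps(text: str) -> list[dict]:
    """Parse /proc/swaps content into one dict per active swap area."""
    entries = []
    for line in text.strip().splitlines()[1:]:  # skip the header row
        filename, kind, size, used, priority = line.split()
        entries.append({
            "filename": filename,
            "type": kind,            # "file" for a swap file, "partition" otherwise
            "size_kib": int(size),
            "used_kib": int(used),
            "priority": int(priority),
        })
    return entries


def swap_active(path: str, proc_swaps: str = "/proc/swaps") -> bool:
    """True if `path` is listed as an active swap area (Linux only)."""
    text = Path(proc_swaps).read_text()
    return any(entry["filename"] == path for entry in parse_proc_swaps(text))
```

For example, `swap_active("/swapfile")` should return True once the swap file is enabled; a 25-GB swap file appears with a size of roughly 25 × 1024 × 1024 KiB.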

Mount the attached disk on the VM

During the VM setup, you created an attached disk. The operating system does not use this disk automatically: it must be formatted and "mounted" before it can be used.

- View the attached disk. You should see an entry for sdb with the size as 200GB:

- Format the attached disk:

- Create the folder and mount the attached disk:

- Update the permissions for the folder so processes can read/write to it:

- Retrieve the disk ID of the mounted drive to confirm that the drive was mounted:

You should see a message similar to the one displayed in the output box below:

Output:

- Retrieve the disk ID of the mounted disk and append it to the /etc/fstab file. This entry ensures that the drive is mounted again if the VM restarts.

- Run the df command to confirm that the disk has been mounted, formatted and the correct size has been allocated:

You should see a message with a line similar to this, which shows the new mounted volume and the size:

Output:

If you need to resize the disk later, follow these instructions.
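The fstab step ties the mount to the disk's UUID rather than its device name (/dev/sdb), because device names are not guaranteed to stay the same across boots. The following Python sketch extracts the UUID from one line of `blkid` output and builds an fstab entry. The mount point `/mnt/disks/chaindata-disk` is a hypothetical placeholder, and the options `discard,defaults,nofail` follow the pattern in the Compute Engine persistent-disk documentation; the lab's own command may use different values.

```python
import re

# Matches KEY="value" pairs such as UUID="..." BLOCK_SIZE="4096" TYPE="ext4".
_PAIR = re.compile(r'([A-Z_]+)="([^"]*)"')


def parse_blkid(line: str) -> dict[str, str]:
    """Parse one blkid line, e.g. '/dev/sdb: UUID="..." TYPE="ext4"'."""
    device, _, rest = line.partition(":")
    fields = dict(_PAIR.findall(rest))
    fields["DEVICE"] = device.strip()
    return fields


def fstab_entry(blkid_line: str, mount_point: str,
                options: str = "discard,defaults,nofail") -> str:
    """Build an fstab line that mounts the filesystem by UUID."""
    fields = parse_blkid(blkid_line)
    if "UUID" not in fields:
        raise ValueError("no UUID found in blkid output")
    fs_type = fields.get("TYPE", "ext4")
    # dump=0 (no dump backups), pass=2 (fsck after the root filesystem)
    return f"UUID={fields['UUID']} {mount_point} {fs_type} {options} 0 2"
```

The `nofail` option lets the VM finish booting even if the attached disk is missing, which makes a detached or resized disk easier to recover from.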

Click Check my progress to verify the objective.

Create a user on the VM

Create a user to run the processes under.

- To create a user named ethereum, execute the following commands:

- Switch to the ethereum user:

- Start the bash command line:

- Change to the ethereum user's home folder:

Install the Ethereum software

- Update the Operating System:

- Install common software:

- Install the Google Cloud Ops Agent:

- Remove the script file that was downloaded:

- Create folders for the logs and chaindata for the Geth and Lighthouse clients:

- Install Geth from the package manager:

- Confirm that Geth is available and is the latest version:

You should see a message with a line similar to this:

Output:

- Download the Lighthouse client. This script will download the latest release from GitHub.

- Extract the Lighthouse tar file and then remove it:

- Move the lighthouse binary to the /usr/bin folder and update the permissions:

- Confirm that lighthouse is available and is the latest version:

You should see a message with a line similar to this; note that the version number might be different:

Output:

- Create the shared JWT secret. This secret is a security mechanism that restricts who can call the execution client's authenticated RPC endpoint (authrpc), which Lighthouse uses to communicate with Geth.

Click Check my progress to verify the objective.
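The Lighthouse download step relies on a script that fetches the latest release from GitHub. As a rough illustration of that idea (not the lab's script), the following Python sketch queries the public GitHub REST endpoint for the latest sigp/lighthouse release and picks the asset whose name ends with `x86_64-unknown-linux-gnu.tar.gz`. That suffix is an assumption about Lighthouse's asset naming; check the release page if it does not match.

```python
import json
import urllib.request

LATEST_RELEASE_URL = "https://api.github.com/repos/sigp/lighthouse/releases/latest"


def fetch_latest_release(url: str = LATEST_RELEASE_URL) -> dict:
    """Fetch release metadata (tag_name, assets, ...) from the GitHub REST API."""
    req = urllib.request.Request(url, headers={"Accept": "application/vnd.github+json"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def pick_asset_url(release: dict,
                   suffix: str = "x86_64-unknown-linux-gnu.tar.gz") -> str:
    """Return the download URL of the first asset whose name ends with `suffix`."""
    # Signature files (.tar.gz.asc) and other architectures do not match the suffix.
    for asset in release.get("assets", []):
        if asset["name"].endswith(suffix):
            return asset["browser_download_url"]
    raise LookupError(f"no asset ending in {suffix!r} in {release.get('tag_name')}")
```

Usage: `pick_asset_url(fetch_latest_release())` returns a URL you could then download and extract. Unauthenticated GitHub API calls are rate-limited, so avoid running this in a tight loop.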

Task 3. Start the Ethereum Execution and Consensus Clients

An Ethereum node runs two clients: Geth, the execution layer, and Lighthouse, the consensus layer. They run in parallel and work together. Geth exposes an authrpc endpoint and port that Lighthouse calls. This endpoint is protected by a shared security token (the JWT secret) saved locally, and Lighthouse connects to Geth using the execution endpoint and that token.

For information on how Geth connects to the consensus client, read the Connecting to Consensus Clients documentation. For more information on how lighthouse connects to the execution client, take a look at the Merge Migration - Lighthouse Book documentation.
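Under the hood, the shared secret is 32 random bytes stored as hex. As described in the Ethereum Engine API authentication specification, the consensus client signs a short-lived HS256 JWT with that secret carrying an `iat` (issued-at) claim, and the execution client rejects tokens whose signature does not match or whose `iat` is too far from its own clock. The following standard-library Python sketch illustrates that flow; it is not Geth's or Lighthouse's implementation, and the 60-second tolerance is the value that specification describes.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import Optional


def new_jwt_secret() -> str:
    """32 random bytes, hex-encoded (64 characters)."""
    return secrets.token_hex(32)


def _b64url(data: bytes) -> str:
    # JWTs use unpadded base64url encoding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _key(secret_hex: str) -> bytes:
    # Tolerate an optional 0x prefix and a trailing newline in the secret file.
    return bytes.fromhex(secret_hex.strip().removeprefix("0x"))


def _sign(signing_input: str, secret_hex: str) -> str:
    mac = hmac.new(_key(secret_hex), signing_input.encode("ascii"), hashlib.sha256)
    return _b64url(mac.digest())


def make_token(secret_hex: str, iat: Optional[int] = None) -> str:
    """Build an HS256 JWT with an iat claim, as a consensus client would."""
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {"iat": int(time.time()) if iat is None else iat}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    return f"{signing_input}.{_sign(signing_input, secret_hex)}"


def verify_token(token: str, secret_hex: str, now: Optional[int] = None,
                 max_skew: int = 60) -> bool:
    """Check the HMAC signature and that iat is within max_skew seconds of now.

    This sketch does not validate the header's alg field.
    """
    signing_input, _, signature = token.rpartition(".")
    if not hmac.compare_digest(signature, _sign(signing_input, secret_hex)):
        return False
    claims_b64 = signing_input.partition(".")[2]
    padded = claims_b64 + "=" * (-len(claims_b64) % 4)
    claims = json.loads(base64.urlsafe_b64decode(padded))
    current = int(time.time()) if now is None else now
    return abs(current - int(claims["iat"])) <= max_skew
```

Because tokens expire within about a minute, a leaked token is of little use; the secret file itself is what must stay private.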

Start Geth

The following starts the Geth execution client.

- First, authenticate in gcloud. Inside the SSH session, run:

Press ENTER when you see the prompt Do you want to continue (Y/n)?

- Navigate to the link displayed in a new tab.

- Click your active username, and then click Allow.

- When you see the prompt Enter the following verification code in gcloud CLI on the machine you want to log into, click the copy button, go back to the SSH session, and paste the code into the prompt Enter authorization code:.

- Set the external IP address environment variable:

- Run the following command to start Geth as a background process. In this lab, you use the "snap" sync mode, which downloads recent blockchain state directly instead of re-executing every historical block; the resulting node is still a full (non-archive) node. To sync by executing every block from genesis, use "full" as the sync mode. You can run this at the command line or save it to a .sh file first and then run it. You can also configure it to run as a service with systemd.

Click Check my progress to verify the objective.

- To see the process id, run this command:

- Check the logs to see if the process started correctly:

You should see a message similar to the one displayed in the output box below. The Geth client won't continue until it pairs with a consensus client.

Output:

- Enter Ctrl+C to break out of the log monitoring.

Start Lighthouse

Now, you'll start the Lighthouse consensus client.

- Run the following command to launch Lighthouse as a background process. You can run this at the command line or save it to a .sh file first and then run it. You can also configure it to run as a service with systemd.

- To see the process id, run the following command:

- Check the log file to see if the process started correctly. This may take a few minutes to show up:

You should see a message similar to the following:

Output:

- Enter Ctrl+C to break out of the log monitoring.

- Check the Geth log again to confirm that the logs are being generated correctly.

You should see a message similar to the one displayed in the output box below.

Output:

Verify node has been synced with the blockchain

Determine whether the node is still syncing. Syncing takes some time, but you don't need to wait for it to finish to complete the lab. There are two ways to check the sync status: the Geth console and an RPC call.

- Run the following Geth command to check if the node is still syncing. Output of "false" means that it is synced with the network.

- At the Geth console execute:

You should see something similar to the following:

Output:

- Type exit to exit out of the Geth console.

- Run the following curl command to check whether the node is still syncing. The command-line tool jq formats the JSON output of the curl command. An output of "false" means that the node is synced with the network.

Output:

- Run the following curl command to check if the node is accessible through the external IP address:

Output:
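The curl checks above send JSON-RPC 2.0 requests to Geth's HTTP endpoint on port 8545. The following Python sketch builds the same kind of request with the standard library and interprets an `eth_syncing` result: `false` when synced, otherwise an object whose `currentBlock` and `highestBlock` fields are hex-encoded quantities. Geth may include additional progress fields, which this sketch ignores. `rpc_request`, `call`, and `describe_sync` are hypothetical helpers.

```python
import json
import urllib.request
from typing import Optional, Union


def rpc_request(url: str, method: str, params: Optional[list] = None,
                request_id: int = 1) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC 2.0 POST request."""
    body = {"jsonrpc": "2.0", "method": method, "params": params or [], "id": request_id}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call(url: str, method: str, params: Optional[list] = None, timeout: float = 10.0):
    """Send the request and return its 'result' field (raises on JSON-RPC errors)."""
    with urllib.request.urlopen(rpc_request(url, method, params), timeout=timeout) as resp:
        reply = json.load(resp)
    if "error" in reply:
        raise RuntimeError(reply["error"])
    return reply["result"]


def describe_sync(result: Union[bool, dict]) -> str:
    """Summarize an eth_syncing result using only currentBlock and highestBlock."""
    if result is False:
        return "synced"
    current = int(result["currentBlock"], 16)
    highest = int(result["highestBlock"], 16)
    pct = 100.0 * current / highest if highest else 0.0
    return f"syncing: block {current} of {highest} ({pct:.1f}%)"
```

Usage on the VM: `describe_sync(call("http://localhost:8545", "eth_syncing"))`, or `int(call("http://localhost:8545", "eth_blockNumber"), 16)` for the latest block number. Replace localhost with the external IP address to test remote access.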

Task 4. Configure Cloud operations

Google Cloud has several operations services to help you manage your Ethereum node. This section walks through configuring Cloud Logging, Managed Prometheus, Cloud Monitoring, alerting, and uptime checks.

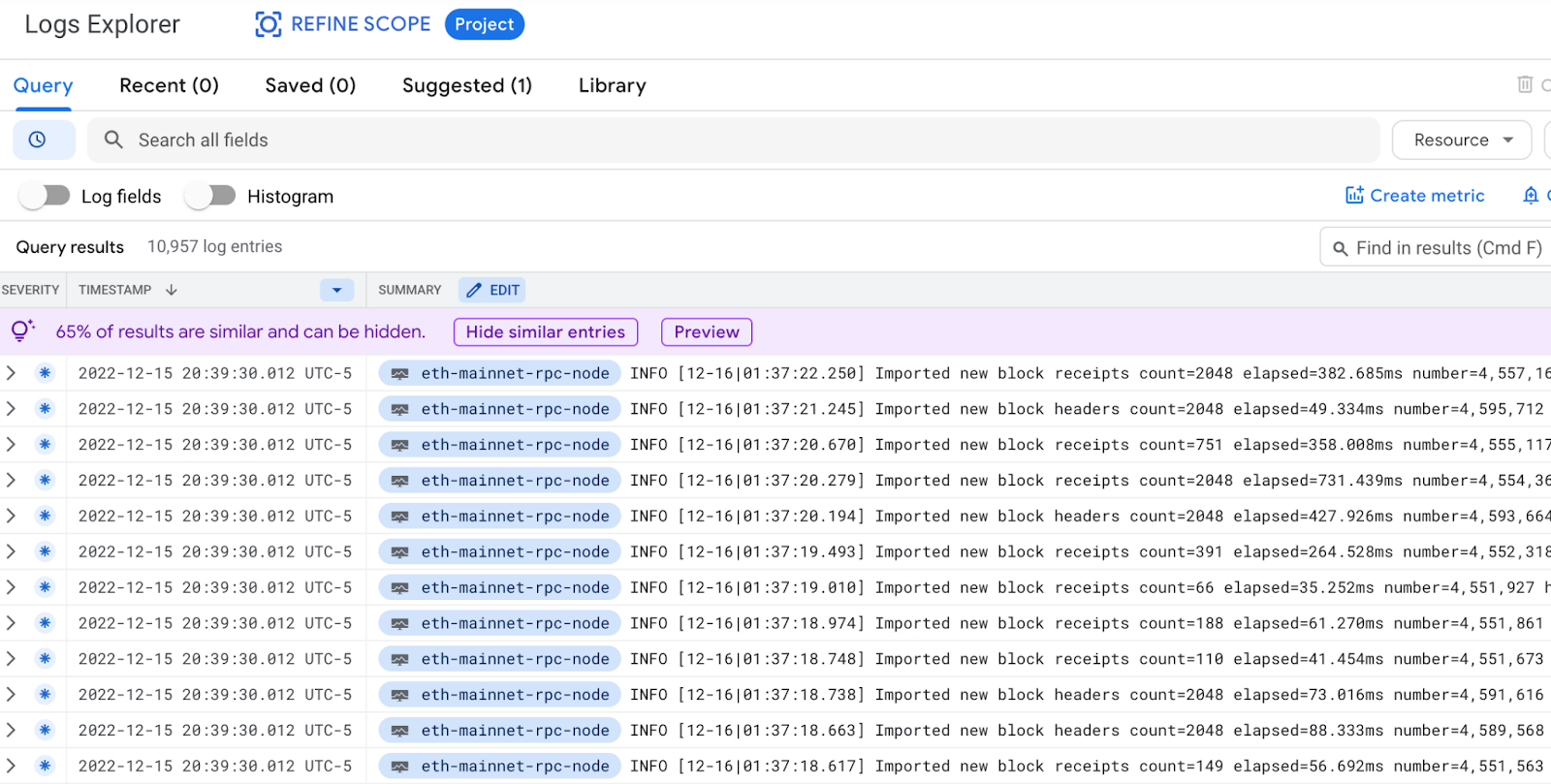

Configure Cloud logging

Geth and Lighthouse write their logs to the log files specified in their startup commands. You'll want to bring this log data into Cloud Logging, which provides powerful search capabilities and lets you create alerts for specific log messages.

- Update the permissions of the Ops Agent configuration file so you can edit it:

- Configure the Ops Agent to send log data to Cloud Logging. Update the file "/etc/google-cloud-ops-agent/config.yaml" to include this Ops Agent configuration. This configuration defines the Geth and Lighthouse log files to import into Cloud Logging:

- After saving, run these commands to restart the agent and pick up the changes:

- Enter Ctrl+C to exit out of the status screen.

- If there is an error in the status, use this command to see more details:

- Check Cloud Logging to confirm that log messages are appearing in the console. From the Navigation Menu, under the Logging section, click Logs Explorer. You should see messages similar to these:

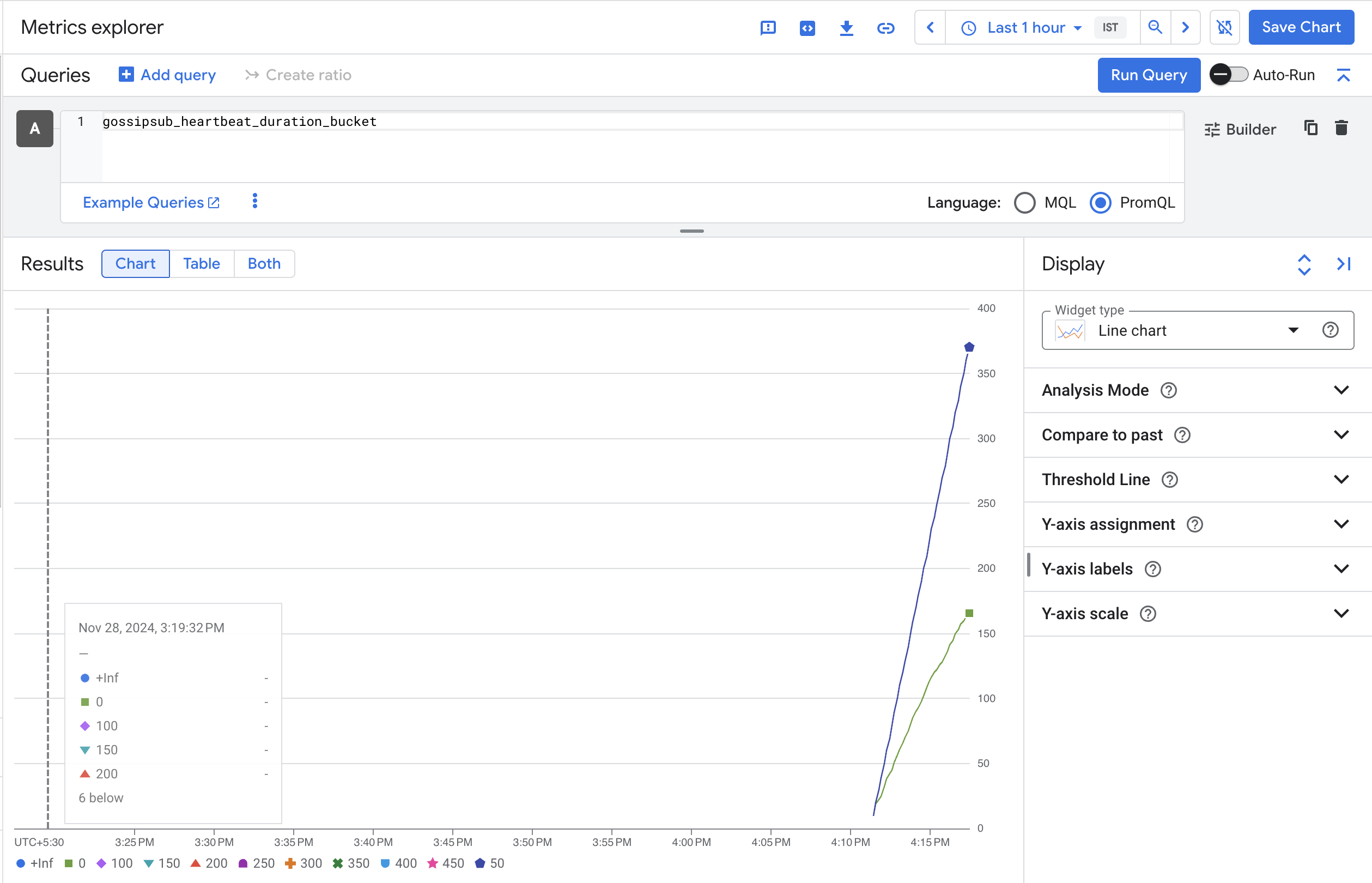

Configure Managed Prometheus

Because you started the Geth and Lighthouse clients with the --metrics flag, both clients expose metrics over an HTTP endpoint. These metrics can be stored in a time series database like Prometheus and used to feed Grafana dashboards. Normally you would need to install Prometheus on the VM, but a small configuration change in the Ops Agent can capture the metrics and store them in the Managed Prometheus service in Google Cloud.

- On the command line of the VM, confirm the Geth metrics endpoint is active.

Output:

- On the command line of the VM, confirm the Lighthouse metrics endpoint is active.

Output:

- Configure the Ops Agent to send the metrics data to Managed Prometheus. Update the file "/etc/google-cloud-ops-agent/config.yaml" to include this Ops Agent configuration. This configuration defines the Geth and Lighthouse metrics endpoints to import into Managed Prometheus:

- After saving, run these commands to restart the agent and pick up the changes:

- Enter Ctrl+C to exit out of the status screen.

- If there is an error in the status, use this command to see more details:

- Check Cloud Monitoring to confirm that the metrics are appearing in the console. From the Navigation Menu, under the Monitoring section, click Metrics Explorer. Select the <> PromQL option. In the query box, enter the Lighthouse metric gossipsub_heartbeat_duration_bucket. Click RUN QUERY. You should see results similar to this:

You can do the same for a Geth metric (for example, rpc_duration_eth_blockNumber_success_count) to confirm that Geth metrics are shown.

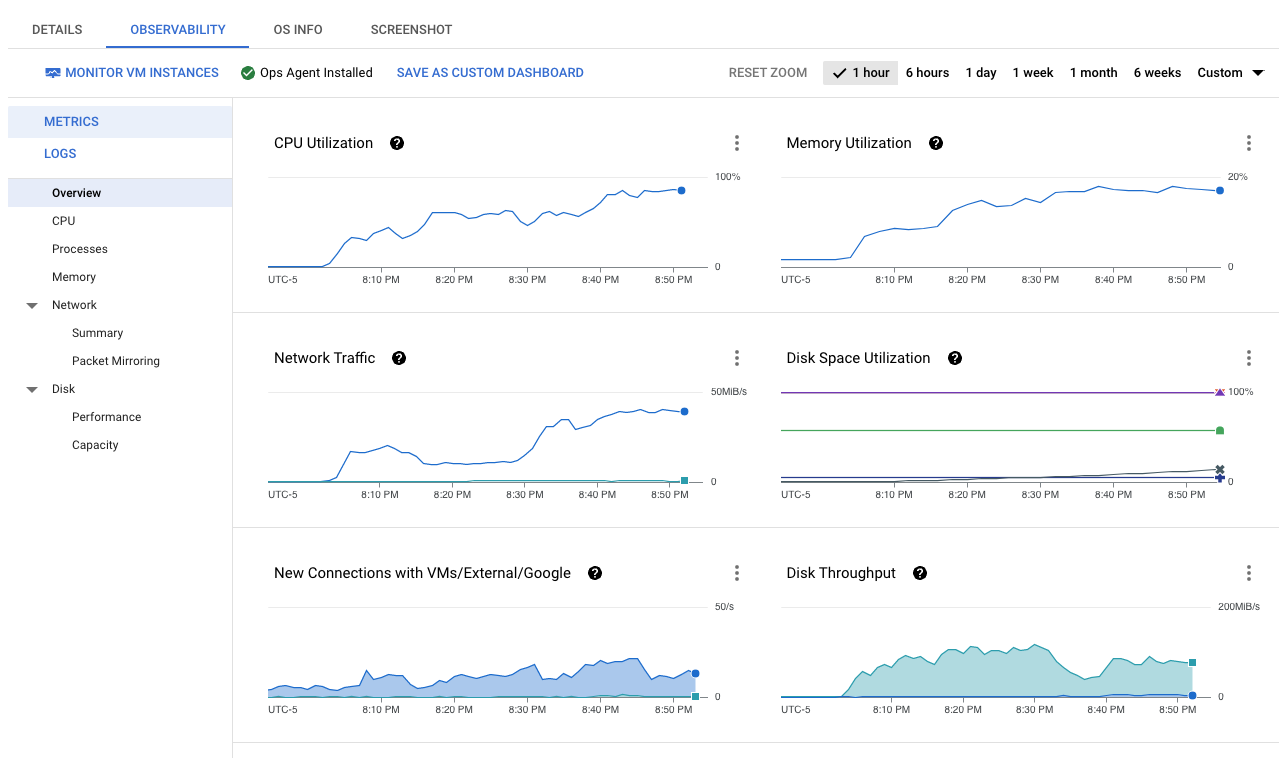
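Both metrics endpoints return plain text in the Prometheus exposition format: `# HELP` and `# TYPE` comment lines, then one sample per line made of a metric name, optional `{label="value"}` pairs, and a value. Assuming default metrics settings, Geth serves this text at http://127.0.0.1:6060/debug/metrics/prometheus. The following simplified Python parser illustrates the format. It is a sketch under stated assumptions: it ignores optional timestamps, does not unescape label values, and assumes no `}` appears inside a label value.

```python
import re
from typing import Dict, List, Tuple

# name{labels} value [timestamp]
_SAMPLE = re.compile(
    r"^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)"
    r"(?:\{(?P<labels>[^}]*)\})?"
    r"\s+(?P<value>\S+)"
)
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')

Sample = Tuple[str, Dict[str, str], float]


def parse_metrics(text: str) -> List[Sample]:
    """Parse Prometheus text-format samples into (name, labels, value) tuples."""
    samples: List[Sample] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = _SAMPLE.match(line)
        if match is None:
            continue  # not a sample line this sketch understands
        labels = dict(_LABEL.findall(match.group("labels") or ""))
        # float() accepts "+Inf" and "NaN", which Prometheus uses.
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


def select(samples: List[Sample], name: str) -> List[Sample]:
    """Keep only samples with the given metric name."""
    return [s for s in samples if s[0] == name]
```

Usage: `select(parse_metrics(text), "gossipsub_heartbeat_duration_bucket")` returns the histogram buckets you would query with PromQL in Metrics Explorer.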

View Cloud monitoring

Cloud Monitoring should already be active for your virtual machine.

- From the Navigation Menu, under the Compute Engine section, click VM Instances.

- Click on the VM eth-mainnet-rpc-node.

- Click on the tab OBSERVABILITY.

- All sections should be showing a graph of different metrics from the VM.

- Click around the different sub-menus and timeframes to check out the different types of metrics that are captured directly from the VM.

Configure notification channel

Configure a notification channel that alerts will be sent to:

- From the Navigation Menu, under the Monitoring section, click Alerting.

- Click EDIT NOTIFICATION CHANNELS.

- Under Email, click ADD NEW.

- Enter the email address and display name of the person who should receive the notifications.

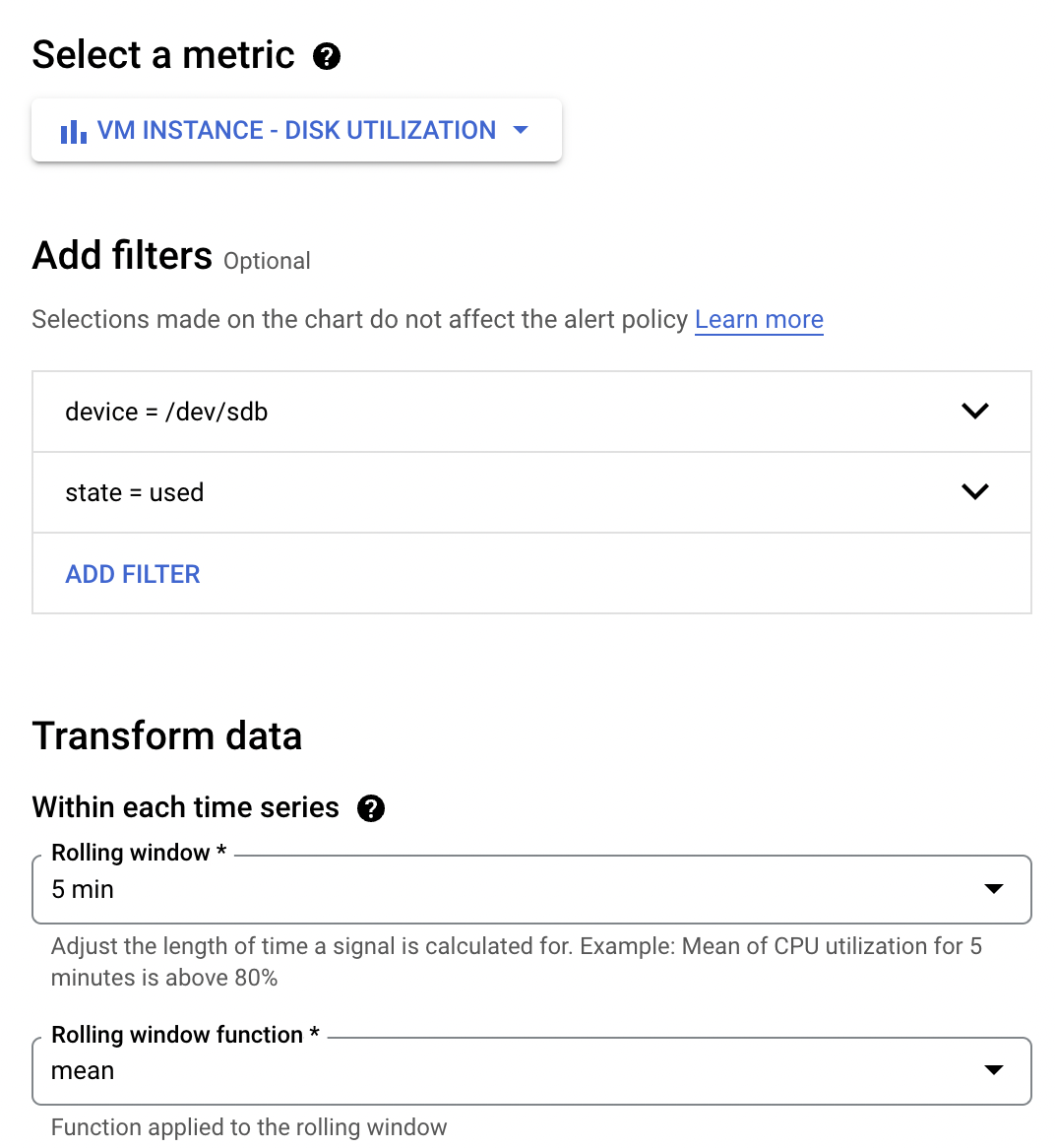

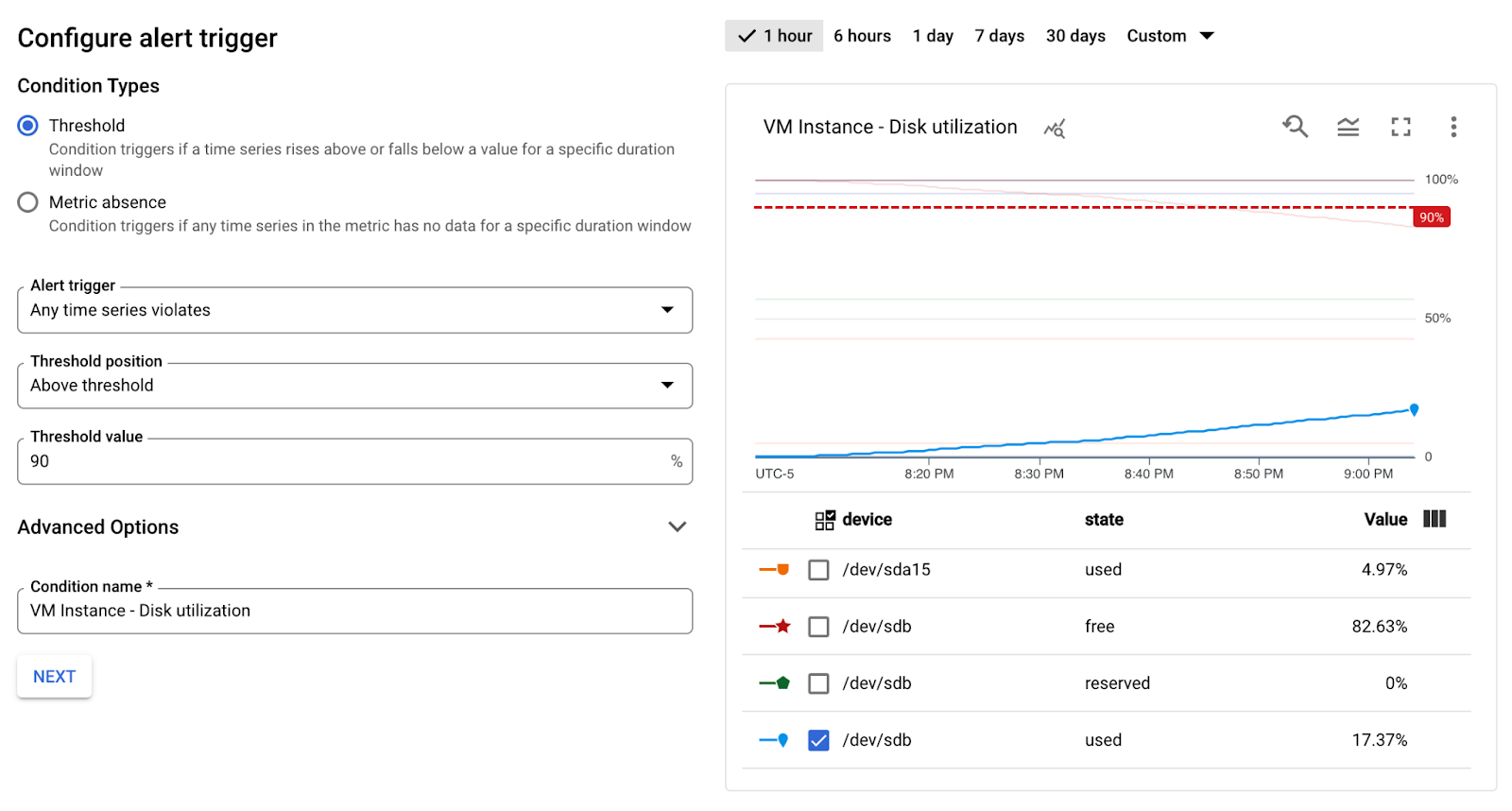

Configure metrics alerts

Configure alerts based on VM metrics:

- From the Navigation Menu, under the Monitoring section, click Alerting.

- Click CREATE POLICY.

- Click SELECT A METRIC.

- Click VM Instance > Disk > Disk Utilization and click Apply.

- Add filters:

| Property | Value (type or select) |
|---|---|
| device | /dev/sdb |
| state | used |

- Click NEXT.

- Enter the Threshold value: 90%

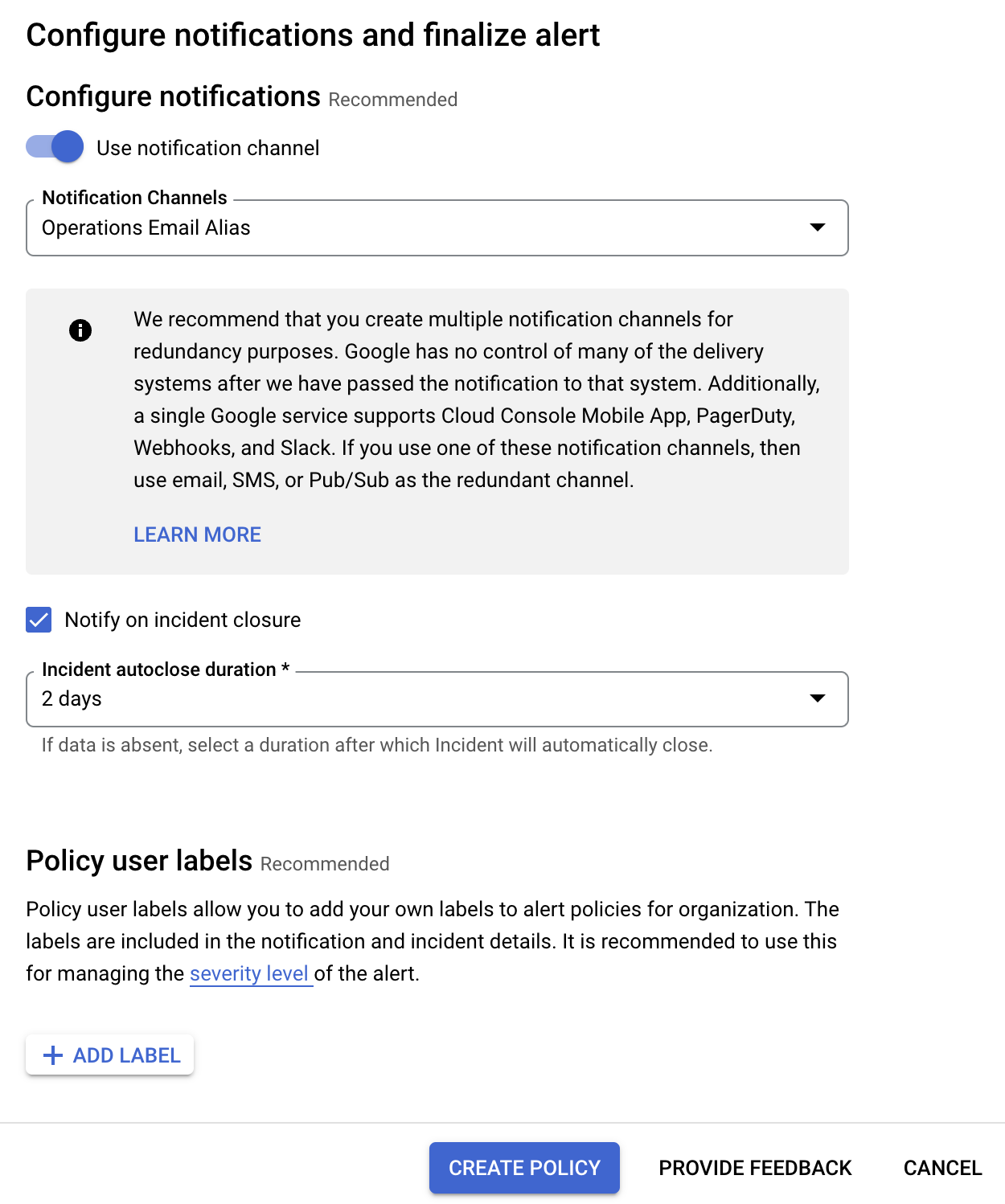

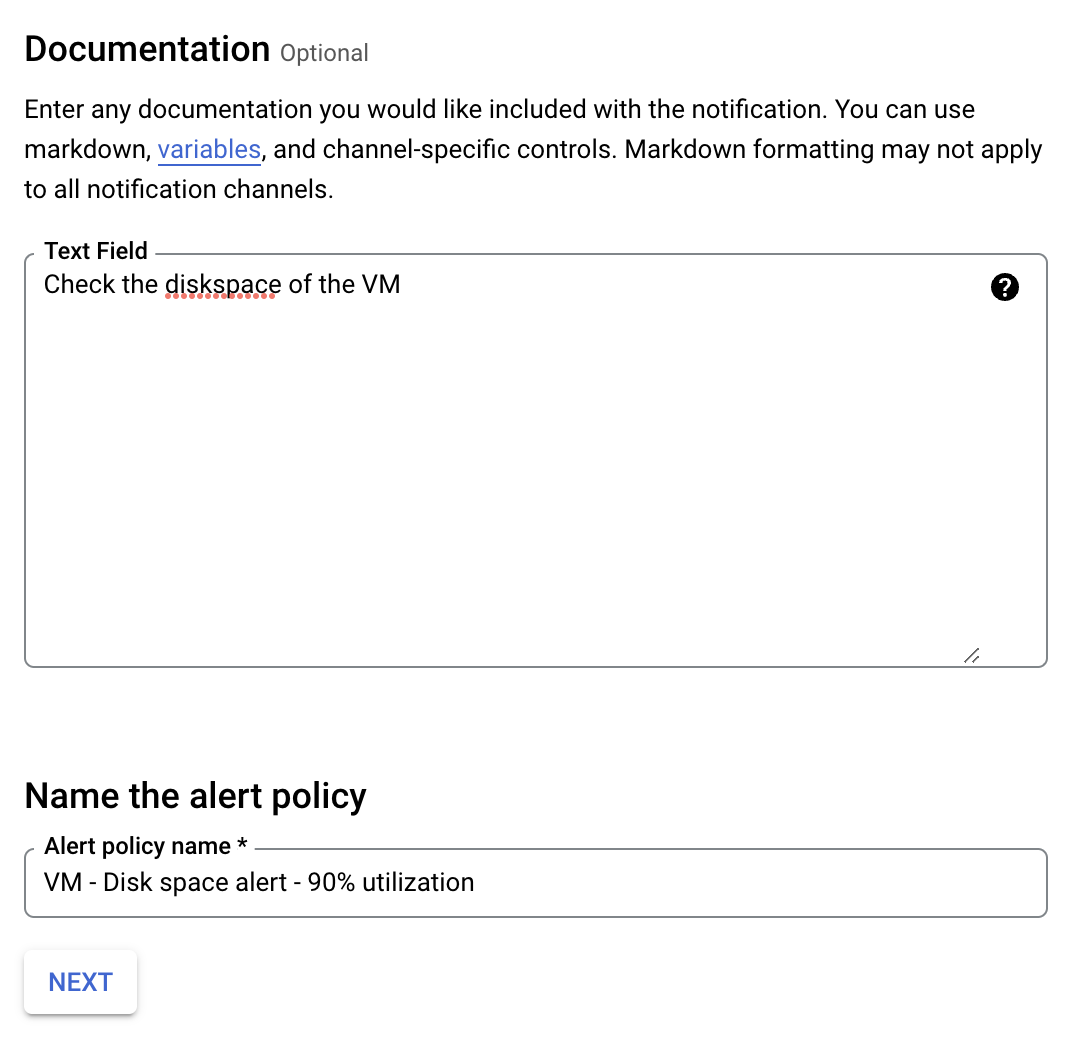

- Click NEXT, select the following values:

| Property | Value (type or select) |
|---|---|
| Use notification channel | select |
| Notify on incident closure | check |
| Incident autoclose duration | 2 days |
| Documentation | Check the disk space of the VM |
| Name | VM - Disk space alert - 90% utilization |

- Click NEXT.

- Click CREATE POLICY.
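The alert policy above fires when disk utilization on /dev/sdb rises above 90%. To spot-check the same condition from the VM itself, the following Python sketch computes used space as a percentage of total capacity with `shutil.disk_usage`. It is only a local approximation of the Cloud Monitoring metric (for example, `df` reports Use% relative to used plus available space, so its figure can differ slightly), and the path you pass in should be the directory where you mounted the attached disk.

```python
import shutil

THRESHOLD_PERCENT = 90.0  # matches the alert policy's threshold


def percent_used(used: int, total: int) -> float:
    """Used space as a percentage of total capacity."""
    if total <= 0:
        raise ValueError("total must be positive")
    return used / total * 100.0


def exceeds_threshold(used: int, total: int,
                      threshold: float = THRESHOLD_PERCENT) -> bool:
    """True when utilization is strictly above the threshold."""
    return percent_used(used, total) > threshold


def check_mount(path: str, threshold: float = THRESHOLD_PERCENT) -> bool:
    """Evaluate the threshold against the filesystem that contains `path`."""
    usage = shutil.disk_usage(path)
    return exceeds_threshold(usage.used, usage.total, threshold)
```

Running `check_mount` on the chain-data mount point periodically gives an early local signal before the Cloud Monitoring incident opens.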

Configure Uptime checks

Configure uptime checks for the HTTP endpoint:

- From the Navigation Menu, under the Monitoring section, click Uptime checks.

- Click CREATE UPTIME CHECK.

- Configure the uptime check with the following values:

| Property | Value (type or select) |
|---|---|
| Protocol | HTTP |
| Resource Type | Instance |
| Applies to single: Instance | eth-mainnet-rpc-node |
| Path | / |
| Expand More target options | |
| Request Method | GET |
| Port | 8545 |
| Click Continue | accept defaults |
| Click Continue | accept defaults |
| Choose notification channel | Select notification channel created previously |
| Title | eth-mainnet-rpc-node-uptime-check |

- Click TEST (it should report success with 200 OK).

- Click CREATE.
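The uptime check sends an HTTP GET to port 8545 and treats a 200 OK response as healthy. A minimal Python sketch of the same probe, useful for testing the endpoint from another machine before creating the check, could look like this; `probe` is a hypothetical helper. Cloud Monitoring runs its checks from Google-managed locations outside your VM, which is one reason the firewall rule allows port 8545 from external addresses.

```python
import http.client
import urllib.error
import urllib.request


def probe(url: str, timeout: float = 10.0) -> bool:
    """Return True if a GET to `url` answers with HTTP 200 within `timeout` seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError, http.client.HTTPException):
        # Connection refused, DNS failure, timeout, malformed response,
        # or a 4xx/5xx status (urllib raises HTTPError, a URLError subclass).
        return False
```

Usage: `probe("http://EXTERNAL_IP:8545/")`, where EXTERNAL_IP is a placeholder for the address reserved as eth-mainnet-rpc-ip.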

Click Check my progress to verify the objective.

Congratulations!

In this lab, you created a Compute Engine instance with a persistent disk, configured a static IP address and network firewall rules, scheduled backups, deployed the Geth and Lighthouse Ethereum clients, tested the setup with Ethereum RPC calls, configured Cloud Logging and Cloud Monitoring, and configured uptime checks.

Next steps / learn more

Check out these resources to continue your Ethereum journey:

- To learn more about the Ethereum execution-layer client, refer to Geth.

- To learn more about the Ethereum consensus-layer client, refer to Lighthouse.

- To learn more about Ethereum in general, refer to Ethereum.

- To learn more about Google Cloud for Web3, refer to the Google Cloud for Web3 website.

- To learn more about Blockchain Node Engine for Google Cloud, refer to the Blockchain Node Engine page.

Google Cloud training and certification

...helps you make the most of Google Cloud technologies. Our classes include technical skills and best practices to help you get up to speed quickly and continue your learning journey. We offer fundamental to advanced level training, with on-demand, live, and virtual options to suit your busy schedule. Certifications help you validate and prove your skill and expertise in Google Cloud technologies.

Manual Last Updated December 26, 2024

Lab Last Tested December 26, 2024

Copyright 2025 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.